Search on Google Cloud Platform - Spanner

Forth article regarding topic Search on Google Cloud is devoted to Cloud Spanner. Idea is to explore various different products on Google Cloud Platform and how they could be used to implement search functionality for fictious eshop. So far, articles in serie are devoted to:

- App Engine and Search API (contains most of information and details which are not repeated in other articles)

- Cloud Datastore

- Cloud SQL

All code for this (and previous) example is in Github repository here.

To repeat most important information (which are described best in first article). Goal is to create search (autocomplete) functionality using Google Cloud Platform for eshop like website. I am using Google App Engine Flexible as web server with Flask framework to render main page and handle search requests and this case Cloud Spanner as database where I will store products information as well as execute queries. I am omitting description of few steps since it's written in detail in previous articles.

Cloud Spanner

Cloud Spanner is managed SQL database. Unlike other SQL databases, Spanner was developed straight in Google and has some very unique properties which makes it very special:

- Horizontally scalable

- Strong consistency

- Transactions

- High availability

- One node can provide up to 10,000 QPS of reads or 2,000 QPS of writes.

- Price is around 1$ per node per hour plus 0.3$ per GB per month, which means that starting price is 720$ per month per one node

- For production use and to be covered by SLA, at least 3 nodes are recommended.

- There are client libraries for specific programming languages to use Spanner

I won't go into details about all these properties, there are few interesting technical papers which explain in more depth how Spanner is designed and how it works: Google's Globally-Distributed Database, Spanner, TrueTime and the CAP Theorem.

Database model

All related to database is in file spanner_search.py, content is here:

from google.cloud import spanner

from db_settings import SPANNER_INSTANCE_ID

DATABASE_ID = 'eshop'

class SpannerSearch():

def __init__(self):

client = spanner.Client()

self.instance = client.instance(SPANNER_INSTANCE_ID)

database = self.instance.database(DATABASE_ID)

self.client = database

def init_schema(self):

"""Creates database for instance and table for products"""

database = self.instance.database(DATABASE_ID, ddl_statements=[

"""CREATE TABLE Products (

Sku INT64 NOT NULL,

ProductName STRING(1024) NOT NULL,

ProductNameCase STRING(1024) NOT NULL,

Price FLOAT64,

SalePrice FLOAT64,

Available STRING(64),

Url STRING(1024)

) PRIMARY KEY (Sku)

"""

])

operation = database.create()

operation.result()

def insert_bulk(self, input_data):

"""input is list of dictionaries. fields are sensitive to position in list"""

values = []

for item in input_data:

values.append((int(item['sku']), item['name'], item['name'].upper(), float(item['price']), float(item['sale_price']), item['available'], item['url']),)

with self.client.batch() as batch:

batch.insert(

table='products',

columns=('Sku', 'ProductName', 'ProductNameCase', 'Price', 'SalePrice', 'Available', 'Url'),

values=values

)

def search(self, query):

"""does search on table with LIKE operator. Number of returned results is set with LIMIT"""

db_query = """SELECT * FROM products WHERE ProductNameCase LIKE '%{}%' LIMIT 20""".format(query.upper())

output = []

with self.client.snapshot() as snapshot:

results = snapshot.execute_sql(db_query)

for row in results:

out = {

'value': row[1],

'label': row[1]

}

output.append(out)

return output

def delete_all(self):

"""deletes database (and table)"""

self.client.drop()I'm using official Spanner client library for Python. Table creation is done through DDL statement. Spanner at the moment doesn't have full text functionality as for example PostgreSQL so I tried to use current possibilities. One could be using REGEX like matching, second (which I used) is using LIKE operator.

Database setup

I use gcloud CLI from Google Cloud SDK to execute commands. First I'm gonna create Spanner instance with 1 node and select region.

gcloud spanner instances create myspanner --config=regional-us-central1 --nodes=1 --description="Test instance" Creating instance...done.

By executing script upload_products.py, data will be uploaded from csv file to Spanner database. Upload of 1.2 million of rows from my local computer took 6 minutes, which is very nice time. I was doing upload in batches containing 2000 items per insert.

Load testing

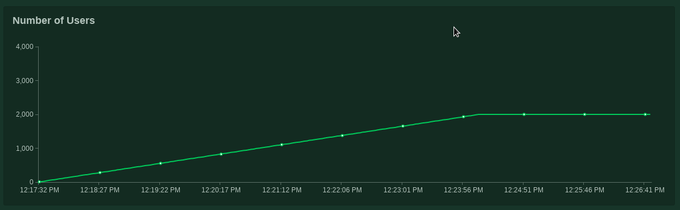

I was doing usual load testing as in previous aticles, using Locusts framework running on Google Kubernetes cluster. Step by step procedure how to setup cluster and deploy in in Github repository. For purpose of load testing, I set minimum number of GAE Flex instances to 10 (with autoscaling), since they take time to spin up. I was running up to 2000 users with hatch rate 5 users per second. Here are screenshots from Locusts UI:

As it can be seen from table, it made total ~236000 requests with response median time 46ms and average response time 194ms.

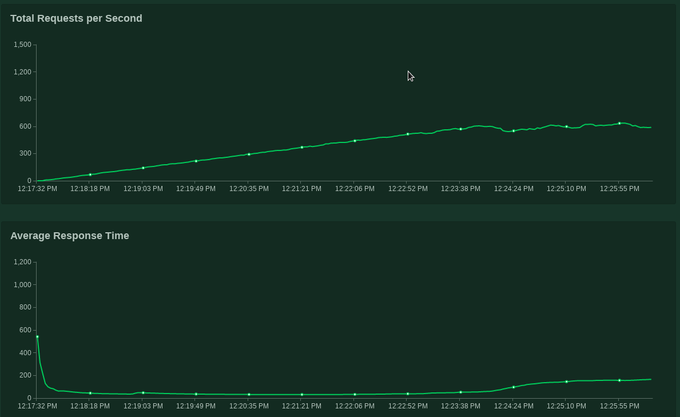

Spanner offers some monitoring graphs as well.

As it can be seen, under maximum load of ~550 request per second (which corresponds to Spanner's read operations graph), CPU utilization was ~20% (right side of chart) for this one node instance.

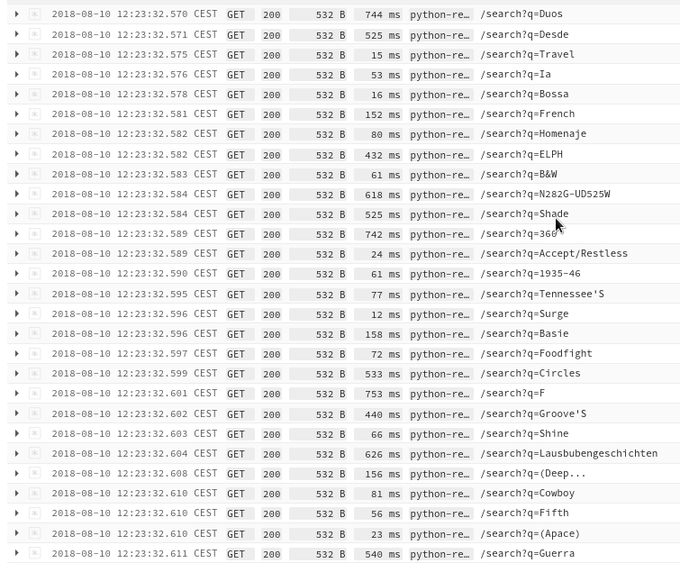

Here is sample of logs from GAE.

Conclusion

Cloud Spanner is by no means database for pet project but serious business which is reflected by price as well as features like multi regional support and horizontal scaling. Currently Spanner doesn't support full text search so depending on the case, using for example LIKE operator could be sufficient.