Search on Google Cloud Platform - Cloud SQL

Continuing series on topic "Search on Google Cloud Platform", in this article I'll explore Cloud SQL as storage, namely PostgreSQL built in functionality for text search. Previous articles / solutions in this series are:

- Search on Google Cloud Platform - App Engine and Search API

- Search on Google Cloud Platform - Cloud Datastore

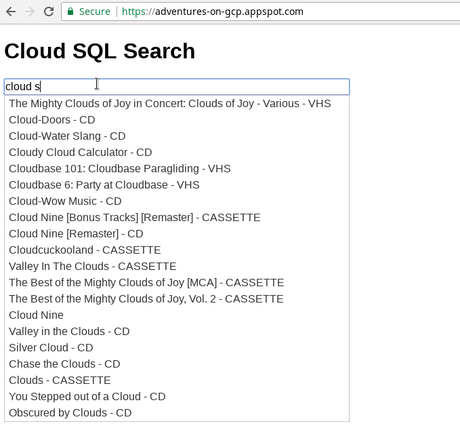

I'll repeat task description from previous articles: "Create autocomplete functionality on Google Cloud Platform for eshop so that when user searches some text it returns products name which contain that text". As a real life I am using Best buy data of their products. Webserver which renders search page and results is done in Flask framework (Python) and deployed on Google App Engine Flexible. Reason why I am using Flexible and not Standard is because at this time, it's not possible to connect to Cloud SQL PostgreSQL from GAE Standard.

Code for this project is in Github repository.

Google Cloud SQL

Cloud SQL was introduced in 2011 first supporting MySQL and in 2017 support for PostgreSQL was introduced and from now I will use usage and properties of Cloud SQL in context of PostgreSQL. Cloud SQL is fully managed database which means that server and disk storage are automatically provisioned. It has also aditional features like:

- Automatic backups, it's possible to define daily window when backups should occur.

- Automatic storage increase, so it shouldn't happen that you run out of disk space

- High availability through replicas in different zones

- Currently supports PostgreSQL 9.6, official version at the moment is 10 and 11 is in beta

- Custom configuration of CPUs and memory, starting from 1 shared CPU and 0.6GB RAM up to 64 vCPUs and 416 GB of RAM memory

- Storage capacity is ranging from 10GB up to 10TB

- Bare minimal configuration starts from ~10$ per month, other services like automatic backups, replicas costs extra of course

- Database migration from onpremise or another cloud provider

- Network access rules, it possible to define IP addresses (ranges) which have access to database instance, or it's possible to use cloud sql proxy https://cloud.google.com/sql/docs/postgres/sql-proxy

Database setup

I will use Google Cloud SDK to do command line operations, so first I will create database instance called "myinstance":

gcloud sql instances create myinstance --database-version=POSTGRES_9_6 --cpu=1 --memory=3840MiB

Created [https://www.googleapis.com/sql/v1beta4/projects/adventures-on-gcp/instances/myinstance]. NAME DATABASE_VERSION LOCATION TIER ADDRESS STATUS myinstance POSTGRES_9_6 us-central1-a db-custom-1-3840 35.232.43.115 RUNNABLE

Note: if you don't have set zone and region in your configuration, you can set it there or you can use --zone and --region flag to specify region and zone where database instance should be.

Note2: It good to have web server and database instance in the same zone for best performance / smallest latency.

Next step is to create database in database instance:

gcloud sql databases create eshop --instance=myinstance Creating Cloud SQL database...done. Created database [eshop]. instance: myinstance name: eshop project: adventures-on-gcp

Default user postgres is automatically created which I will use for this example, but I need to set password for it.

gcloud sql users set-password postgres --instance myinstance --host=% --prompt-for-password

Database model

All stuff related to database is in file sql_search.py. It's worth mentioning that I am using SQLAlchemy as ORM and SQLAlchemy-Searchable integrates PostgreSQL's full text search into SQLAlchemy models. Content of sql_search.py file:

import os

import sqlalchemy

from sqlalchemy import create_engine, Column, String, Float, BigInteger

from sqlalchemy.orm import sessionmaker

from sqlalchemy.ext.declarative import declarative_base

from sqlalchemy_searchable import make_searchable

from sqlalchemy_utils.types import TSVectorType

from sqlalchemy_searchable import search

from sqlalchemy_searchable import sync_trigger

from db_settings import USERNAME, PASSWORD, DB_NAME

SQLALCHEMY_DATABASE_URI = os.environ['SQLALCHEMY_DATABASE_URI']

if not SQLALCHEMY_DATABASE_URI:

SQLALCHEMY_DATABASE_URI='postgresql+psycopg2://{USER_NAME}:{PASSWORD}@127.0.0.1:5431/{DATABASE_NAME}'.format(

USER_NAME=USERNAME, PASSWORD=PASSWORD, DATABASE_NAME=DB_NAME

)

# these are some necessary lines

Base = declarative_base()

make_searchable(Base.metadata)

engine = create_engine(SQLALCHEMY_DATABASE_URI, echo=True)

sqlalchemy.orm.configure_mappers()

Session = sessionmaker(bind=engine)

session = Session()

class Product(Base):

"""Table for products"""

__tablename__ = 'products'

sku = Column(BigInteger, primary_key=True)

product_name = Column(String)

price = Column(Float)

sale_price = Column(Float)

type = Column(String)

url = Column(String)

image = Column(String)

available = Column(String)

search_vector = Column(TSVectorType('product_name', )) # this field is for full text search

def __repr__(self):

return self.product_name

class PostgreSQLSearch():

def init_schema(self):

"""creates table"""

# configure_mappers()

session.commit() # with out this line, Flask hangs for some reason

Base.metadata.create_all(engine)

sync_trigger(engine, 'products', 'search_vector', ['product_name'])

def delete_all(self):

"""deletes table"""

session.commit() # with out this line, Flask hangs for some reason

Product.__table__.drop(engine)

def insert_bulk(self, product_data):

"""creates multiple row (objects) and commits into database"""

objects = []

for product in product_data:

if product.get('name', ''):

product_db = Product(sku=product['sku'], product_name=product['name'], price=product['price'],

url=product['url'], type=product['type'], available=product['available'],

sale_price=product['sale_price'], image=product['image']

)

objects.append(product_db)

session.bulk_save_objects(objects)

session.commit()

def search(self, search_query):

"""making query to database"""

query = session.query(Product)

query = search(query, search_query, sort=True)

results = query.limit(20).all()

output = []

for item in results:

out = {

'value': item.product_name,

'label': item.product_name,

'sku': item.sku

}

output.append(out)

return output

if __name__ == '__main__':

db_client = PostgreSQLSearch()

db_client.init_schema()

I defined class Product with some fields and special field is search_vector where is defined vector for text search and fields that should be indexed. So when instance is saved (row inserted) it automatically processes text from product_name and fills search_vector field. Other than that, there is nothing much special, I created methods for bulk uploading, querying, creation and deleting table.

Uploading data

We have 1.2M rows of product data and since SQLAlchemy searchable works only when objects are save through SQLAlchemy, straightforward import of massive csv file is not possible unfortunately. That's why I wrote script to create and save objects into database (which is not most efficient way, but I guess I don't have a choice). Other thing is that since I want it to be as fast as possible, I'll create server in the same zone. (try to upload from your local computer and you'll notice big difference, especially if database instance is in the other half of planet).

To create Google Compute Engine instance, command is:

gcloud compute instances create instance-1 --scopes=sql-admin --preemtible Created [https://www.googleapis.com/compute/v1/projects/adventures-on-gcp/zones/us-central1-a/instances/instance-1]. NAME ZONE MACHINE_TYPE PREEMPTIBLE INTERNAL_IP EXTERNAL_IP STATUS instance-1 us-central1-a n1-standard-1 10.128.0.4 35.206.91.84 RUNNING

I'm adding specific scope for compute engine so it can be authorized to use Cloud SQL and I'm using preemtible since this will take less than hours and... every cent counts.

I'll copy code whole of repository since inside of it I have my csv file with products data. This can take few minutes since there are many files, simpler would be to zip it and copy just one file and then unzip it.

copy folder from local compute to server:

gcloud compute scp --recurse gcp-search instance-1:~/

ssh into server

gcloud compute ssh instance-1

installing pip so I can install rest of Python's dependencies:

sudo apt-get install python-pip

go to folder cloud_sql/webapp on server and install python dependencies:

pip install -r requirements.txt

finally check if variable PRODUCTS_LOCAL_PATH in settings.py in root of repository reflects path on server, if not update it.

Other important part is to handle connection to database instance. Like I wrote in the beggining, one possibility is to allow specific IP address (of our server) to have access, or to use proxy for Cloud SQL. I'll use second option. First step is to download binary for proxy with command:

wget https://dl.google.com/cloudsql/cloud_sql_proxy.linux.amd64 -O cloud_sql_proxy

change it to be executable:

chmod +x cloud_sql_proxy

we need to get "instance connection name" for cloud sql database instance:

gcloud sql instances describe myinstance backendType: SECOND_GEN connectionName: adventures-on-gcp:us-central1:myinstance databaseVersion: POSTGRES_9_6 ...

second line (connectionName) contains information we need. Connection name is usually in format <project-id>:<region>:<instance-name>.

it's good idea to open another terminal and ssh to server instance and run proxy with following command:

./cloud_sql_proxy -instances=adventures-on-gcp:us-central1:myinstance=tcp:5431 2018/08/08 12:56:58 Listening on 127.0.0.1:5431 for adventures-on-gcp:us-central1:myinstance 2018/08/08 12:56:58 Ready for new connections 2018/08/08 12:58:31 New connection for "adventures-on-gcp:us-central1:myinstance" 2018/08/08 12:58:37 Client closed local connection on 127.0.0.1:5431

Now in another terminal window you can execute first scipt (from folder webapp) to create table in database where we will upload data:

python sql_search.py

and other command to start uploading process (from folder cloud_sql)

python upload_data.py

Hopefully there will be no errors, script prints timestamp for every 10000 inserts. Whole process took for me around 15 minutes.

Everything regarding database is now done, it's possible to delete GCE server

gcloud compute instances delete instance-1 The following instances will be deleted. Any attached disks configured to be auto-deleted will be deleted unless they are attached to any other instances or the `--keep-disks` flag is given and specifies them for keeping. Deleting a disk is irreversible and any data on the disk will be lost. - [instance-1] in [us-central1-a] Do you want to continue (Y/n)? Deleted [https://www.googleapis.com/compute/v1/projects/adventures-on-gcp/zones/us-central1-a/instances/instance-1].

Web server deployment

Web server is basically same as in previous articles, simple Flask application with some endpoints which I will deploy on Google App Engine. There are specific configurations which are needed to be set in app.yaml file for GAE regarding Cloud SQL connection. This is how app.yaml looks life.

runtime: python

env: flex

entrypoint: gunicorn -b :$PORT main:app

runtime_config:

python_version: 3

env_variables:

# Replace user, password, database, and instance connection name with the values obtained

# when configuring your Cloud SQL instance.

# postgresql+psycopg2://<username>:<password>@/<database name>?host=/cloudsql/<instance connection name>

SQLALCHEMY_DATABASE_URI: postgresql+psycopg2://postgres:mypass@/eshop?host=/cloudsql/adventures-on-gcp:us-central1:myinstance

beta_settings:

# cloud_sql_instances: <instance connection name>

cloud_sql_instances: adventures-on-gcp:us-central1:myinstanceI use environmental variable SQLALCHEMY_DATABASE_URI to distinguish connection configuration when I use database locally (through proxy) and on GAE (used in sql_search.py).

beta_settings is specific setting which also needed and contains instance connection name

If those variables are set, application is deployed with command:

gcloud app deploy app.yaml --promote

This make take some time (sometimes even 10 minutes) since Docker image is created and deployed.

You can check application on url http://<project-id>.appspot.com

Load testing

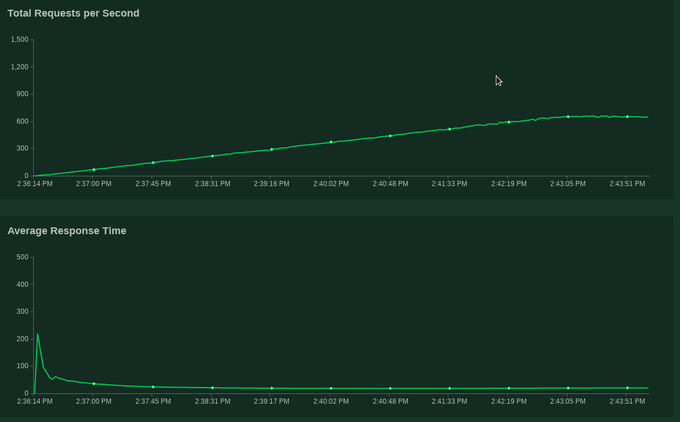

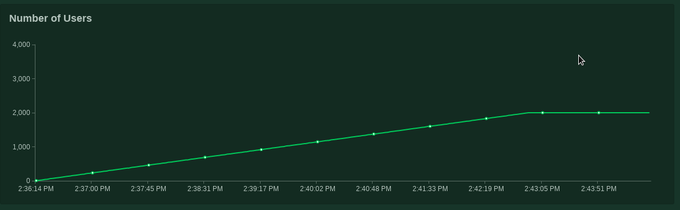

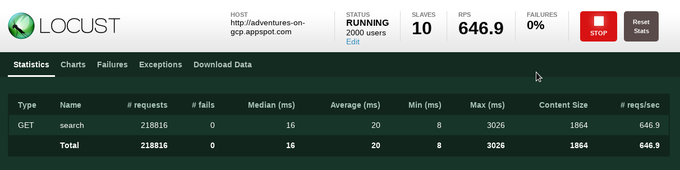

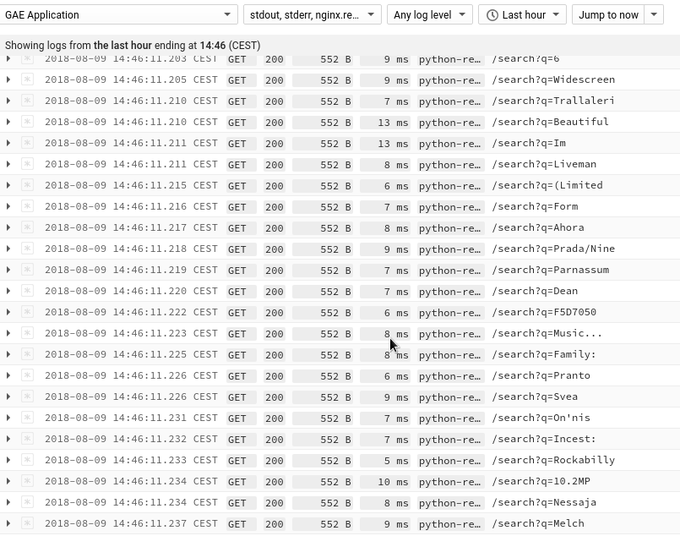

Since everything is working ok, as in previous cases I am doing load testing using Locust framework deployed on Kubernetes cluster. More details are here https://www.the-swamp.info/blog/search-google-cloud-platform-app-engine-and-search-api/ (under Load Testing) and how to set up cluster here https://github.com/zdenulo/gcp-search/tree/master/load-testing. For the testing purposes, I deployed GAE app with 10 instances already running (with autoscaling) since GAE Flex is much slower than GAE Standard in terms of spinning new instances, I didn't want that autoscaling influences results.

I was running up to 2000 users with hatch rate 5 users per second. As it can be seen from graph, test lasted about 10 minutes, there were no server errors or similar, in the end I think it spin up 13 instances altogether. In total there were 218816 requests with median response 16ms and average 20ms which makes it fastest solution so far. Worth noting is that database server had peak CPU load around 30%.

Conclusion

Since PostreSQL is battle tested and one of the most popular open source SQL databases and provides lots of additional features, it can be great choice to be used for eshops database storage or similar. Cloud SQL on top of that provides managed features like possiblity to easily scale server instance, automatic backups, high availability.