Executing Jupyter Notebooks on serverless GCP products

Jupyter Notebooks are a great tool for data science and analysis. They are developed on a Jupyter Notebook server, which can be installed and run locally during development. Work usually starts with exploring the data, developing, building prototypes and playing with the data. For example, you might explore Covid data loaded as a CSV file from Our World in Data. You notice that it also contains world-wide totals, and you would like to track just that data and write it into a BigQuery table every day :) Such a notebook could look like this:
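A hypothetical sketch of its core filtering step (not the author's actual notebook) might keep only the world-wide rows for one date. The column names `location`, `date` and `new_cases` and the `World` label are assumptions about the Our World in Data CSV layout, and the sample values are made up. In a real notebook the CSV would be downloaded first and the result written to BigQuery; both steps are omitted here so the sketch runs offline.

```python
import csv
import io

# Assumed subset of the Our World in Data CSV layout; values are made up.
SAMPLE_CSV = """location,date,new_cases
World,2021-03-01,111
World,2021-03-02,222
Croatia,2021-03-01,5
"""


def world_rows_for_date(csv_text, date):
    """Return the rows whose location is 'World' for the given YYYY-MM-DD date."""
    reader = csv.DictReader(io.StringIO(csv_text))
    return [row for row in reader if row["location"] == "World" and row["date"] == date]


# In the notebook, `date` would be the value injected by Papermill.
rows = world_rows_for_date(SAMPLE_CSV, "2021-03-01")
print(rows[0]["new_cases"])  # 111
```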

OK, so you're done with development, you've tested it and it works, and now you want to schedule it to run daily. Since this task isn't computationally demanding, it can be deployed on a serverless service such as Cloud Run or Cloud Functions. These products have pay-per-use billing and a generous free tier, so you probably won't pay anything for it. I'll use the Papermill library, which executes Jupyter Notebooks and supports passing input parameters to them.

The full GitHub repository is here.

Setting this up involves a few steps:

1. Wrap execution in a web application

The Jupyter Notebook will be executed within an HTTP request, so I'm adding Flask as a lightweight web framework to wrap the execution. Executing a notebook with Papermill is straightforward. Since the "date" changes on every run and is used as an input variable in the notebook, it needs to be passed in the "parameters" argument. The input date can be passed as a request parameter; if it's missing, yesterday's date is used. The code for such a web app looks like this:

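```python
import datetime
import logging
import os

import papermill as pm
from flask import Flask
from flask import request as flask_request

app = Flask(__name__)


@app.route('/')
def main(request=None):
    """Execute the notebook for the requested (or yesterday's) date.

    Cloud Functions passes the HTTP request in as an argument; when the app
    is served by Flask itself (e.g. on Cloud Run), Flask's request is used.
    """
    logging.info("starting job")

    req = request if request is not None else flask_request
    input_date = req.args.get('input_date', '')
    if not input_date:
        # Default to yesterday's date in YYYY-MM-DD format
        input_date = (datetime.datetime.now() - datetime.timedelta(days=1)).strftime('%Y-%m-%d')

    # Values here override the defaults in the cell tagged "parameters"
    parameters = {
        'date': input_date
    }

    # /tmp is the writable location on Google Cloud serverless products
    pm.execute_notebook(
        'covid_stats.ipynb',
        '/tmp/covid_stats_out.ipynb',
        parameters=parameters
    )

    logging.info("job completed")
    return 'ok'


if __name__ == '__main__':
    # The port comes from the PORT env variable; listen on all interfaces
    port = int(os.environ.get('PORT', '8080'))
    app.run(host='0.0.0.0', port=port)
```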

Papermill's execute_notebook function takes the input and output notebook paths as well as the notebook "parameters". I'm saving the output notebook into the "/tmp" folder, since in Google Cloud serverless products "/tmp" is usually the only writable location.
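The date defaulting and the output path can be pulled into small pure functions, so they can be tested without executing a notebook. This is a sketch, not the repository's code: `resolve_input_date` and `output_notebook_path` are hypothetical names, and writing one output notebook per date (instead of overwriting a single file) is a suggested variation.

```python
import datetime
import os
import tempfile


def resolve_input_date(input_date, today=None):
    """Return input_date, or yesterday's date (YYYY-MM-DD) when it is empty."""
    if input_date:
        return input_date
    today = today or datetime.date.today()
    return (today - datetime.timedelta(days=1)).strftime('%Y-%m-%d')


def output_notebook_path(input_date):
    """Build a per-date output path in the temp directory (usually /tmp on Linux)."""
    return os.path.join(tempfile.gettempdir(), f'covid_stats_out_{input_date}.ipynb')


print(resolve_input_date('', today=datetime.date(2021, 3, 1)))  # 2021-02-28
print(resolve_input_date('2021-01-15'))                          # 2021-01-15
```

Keep in mind that on Cloud Run and Cloud Functions, files written to "/tmp" are held in memory and disappear when the instance is recycled, so the executed notebook is mainly useful for debugging unless it is copied elsewhere.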

2. Set parameters in the notebook

For Papermill to recognize input parameters in the notebook, you need to create a dedicated cell that contains them. The second important thing is to add the tag "parameters" to this cell. This can be done in several ways, depending on whether you are using Jupyter or JupyterLab:

First, select the cell with the input variables:

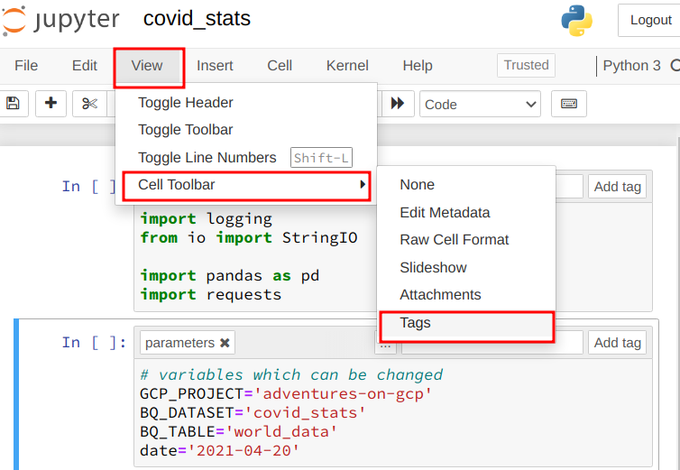

If you're using Jupyter, click View -> Cell Toolbar -> Tags. A text field for entering a tag then appears in the top right corner of each cell, and existing tags are shown in its top left corner.

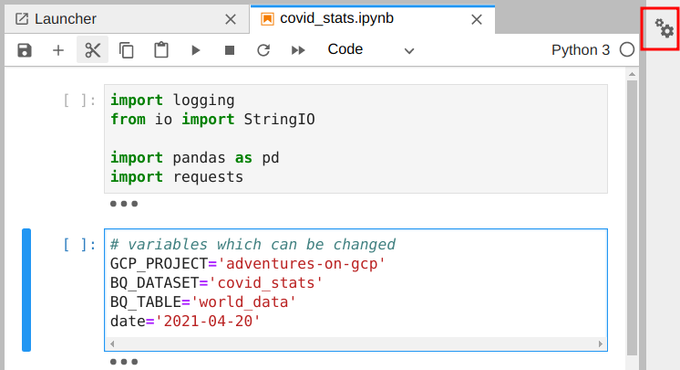

If you're using JupyterLab, click the settings icon in the top right corner:

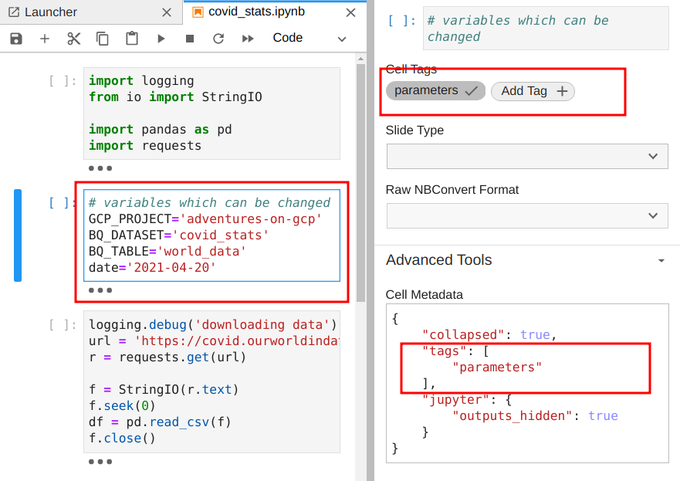

Then click the Add Tag button and type "parameters". Alternatively, you can write the tag directly into the cell metadata in JSON format.
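In the .ipynb file the tag ends up in the cell's metadata as `"tags": ["parameters"]`, so it can also be added programmatically. When executing, Papermill inserts a new cell tagged `injected-parameters` right after the tagged cell, so the passed values override the defaults. A minimal sketch, assuming the parameters cell is the first cell; the in-memory notebook and the `tag_parameters_cell` helper are hypothetical.

```python
import json

# A minimal, hypothetical notebook (nbformat 4) with a default-value cell first.
notebook = {
    "nbformat": 4,
    "nbformat_minor": 4,
    "metadata": {},
    "cells": [
        {"cell_type": "code", "metadata": {}, "execution_count": None,
         "outputs": [], "source": ["date = '2021-01-01'  # default value"]},
        {"cell_type": "code", "metadata": {}, "execution_count": None,
         "outputs": [], "source": ["print(date)"]},
    ],
}


def tag_parameters_cell(nb, index=0):
    """Add the 'parameters' tag to the cell at `index` (safe to call twice)."""
    tags = nb["cells"][index].setdefault("metadata", {}).setdefault("tags", [])
    if "parameters" not in tags:
        tags.append("parameters")
    return nb


tag_parameters_cell(notebook)
print(json.dumps(notebook["cells"][0]["metadata"]))  # {"tags": ["parameters"]}
```

For a real file, load the .ipynb with `json.load`, call the helper with the right cell index and write the result back with `json.dump`.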

3. Deployment

As I mentioned at the beginning, there are several options for where this code can be deployed. Since this is a simple task that needs little time and few resources, it can be deployed to basically any serverless compute product on Google Cloud.

Cloud Functions

A bash script for deployment could look like this:

```bash
#!/bin/bash

# set GCP_PROJECT (env) variable

CLOUD_FUNCTION_NAME=cf-jn

gcloud functions deploy $CLOUD_FUNCTION_NAME \
  --project $GCP_PROJECT \
  --entry-point main \
  --runtime python37 \
  --trigger-http \
  --memory 512Mi \
  --timeout 180 \
  --allow-unauthenticated
```

Cloud Run

The deployment script:

```bash
#!/bin/bash

# set GCP_PROJECT (env) variable

CLOUD_RUN_NAME=cr-jn

gcloud beta run deploy $CLOUD_RUN_NAME \
  --project $GCP_PROJECT \
  --memory 512Mi \
  --source . \
  --platform managed \
  --allow-unauthenticated
```

I'm leaving out App Engine since it requires an extra config file :), but the deployment command is similar. I allowed unauthenticated access in both deployments, which means the service is accessible from the whole internet. In real life, you would of course restrict access to specific accounts only.

Conclusion

Jupyter Notebooks are often used to work with large data sets and therefore need plenty of RAM and CPU, typically on a dedicated virtual machine. There are, however, cases where that isn't so, and then deploying and executing them on one of Google Cloud's serverless compute products makes perfect sense: it hides resource provisioning, provides simple deployment and, bottom line, saves money.

Lastly, on Google Cloud, Cloud Scheduler can be used to invoke the deployed web application periodically (daily) with an HTTP request.
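A scheduled call without query parameters makes the handler fall back to yesterday's date, while the `input_date` parameter makes it easy to backfill days that were missed. A hypothetical sketch that builds one request URL per day; the service URL is a placeholder for the one printed by the deploy command, and each URL could then be requested with any HTTP client (e.g. `urllib.request.urlopen`).

```python
import datetime
from urllib.parse import urlencode

# Placeholder service URL (hypothetical); use the URL of your deployment.
SERVICE_URL = "https://example-service.a.run.app/"


def backfill_urls(base_url, start, end):
    """Return one URL per day from start to end (inclusive), passing the
    date through the input_date query parameter."""
    urls = []
    day = start
    while day <= end:
        urls.append(f"{base_url}?{urlencode({'input_date': day.isoformat()})}")
        day += datetime.timedelta(days=1)
    return urls


for url in backfill_urls(SERVICE_URL, datetime.date(2021, 3, 1), datetime.date(2021, 3, 3)):
    print(url)
```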