Search on Google Cloud Platform - Cloud Datastore

In the previous article I described a simplified solution for implementing search functionality (for an eshop or similar) using Google Cloud Platform products, namely the Search API, which is part of Google App Engine Standard. In this article I will describe a solution using Cloud Datastore as storage. Most of the introductory explanation is in the previous article, so here I will focus only on the implementation of the search functionality.

Just as a reminder, the task description goes like this: "Imagine you are an eshop and you want to implement autocomplete of your product descriptions, so that when users type some words in the search box, they get products which contain those words. How would you do it on GCP to be scalable, fast etc..."

The repository for this as well as the previous example is on GitHub.

Cloud Datastore

Cloud Datastore is a NoSQL database which was first introduced as part of Google App Engine in 2008 and then in 2013 as a standalone product on Google Cloud. Since it's NoSQL, it's scalable but comes with some restrictions in comparison with SQL databases. The most notable one for text search is that it is not possible to do a "LIKE" or regular expression query, which does partial text matching and would be suitable for our text search. That means that if you want to query on text, it needs to be an exact match. This implies that some customization and manual work is needed to prepare the data to be searchable.

Some properties of datastore:

- it's NoSQL, which implies some limits:

  - every query you want to execute needs an index

- supports transactions

- practically no limit on storage

- pay-per-use model: $0.06 per 100k reads, $0.18 per 100k writes, $0.02 per 100k deletes and $0.18 per GB of storage per month, with a free daily quota (50k reads, 20k writes, 20k deletes).

- there is an emulator for local development

- there are client libraries for several programming languages, or it can be accessed from Google App Engine Standard.

Model

I am again using Google App Engine Standard with Python. As I mentioned, the Datastore API is part of the GAE SDK, so it's straightforward to use, especially with the NDB library (there is also an official Python client library, https://googlecloudplatform.github.io/google-cloud-python/latest/datastore/client.html, which can be used anywhere). The database model I created looks like this:

    import re

    from google.appengine.ext import ndb

    # Assumption: regex_replace is not shown in this listing; this stand-in
    # replaces every run of non-alphanumeric characters.
    regex_replace = re.compile(r'[^a-z0-9]+')


    class Product(ndb.Model):
        """Datastore model representing product"""
        partial_strings = ndb.StringProperty(repeated=True)
        product_name = ndb.StringProperty()
        price = ndb.FloatProperty()
        url = ndb.StringProperty()
        type = ndb.StringProperty()

        def _pre_put_hook(self):
            """before save, parse product name into strings"""
            if self.product_name:
                product_name_lst = regex_replace.sub(' ', self.product_name.lower()).split(' ')
                # keep only non-empty words longer than 2 characters
                product_name_lst = [x for x in product_name_lst if x and len(x) > 2]
                self.partial_strings = product_name_lst

        @classmethod
        def search(cls, text_query):
            """Return up to 20 products containing every word of the query"""
            words = text_query.lower().split(' ')
            words = [w for w in words if w]
            query = cls.query()
            for word in words:
                # each equality filter narrows the query further (logical AND)
                query = query.filter(cls.partial_strings == word)
            return query.fetch(20)

        @classmethod
        def create(cls, item):
            """Create object"""
            key = ndb.Key(cls, int(item['sku']))
            obj = cls(key=key, price=float(item['price']),
                      product_name=item['name'], url=item['url'],
                      type=item['type'])
            return obj

I added a few extra fields like price, type, url... As I wrote, text search in Datastore needs extra customization to work properly, so here are the steps:

- in the _pre_put_hook method (it's executed before saving an instance) I do basic text splitting. I split the product name on spaces and remove words shorter than 3 characters, since I don't want to query those words. I store this list of words in the partial_strings property. Datastore allows properties which contain a list of values, and these properties can be queried on the content of that list.

- in the create method I am using the sku as the id (key) for entities.

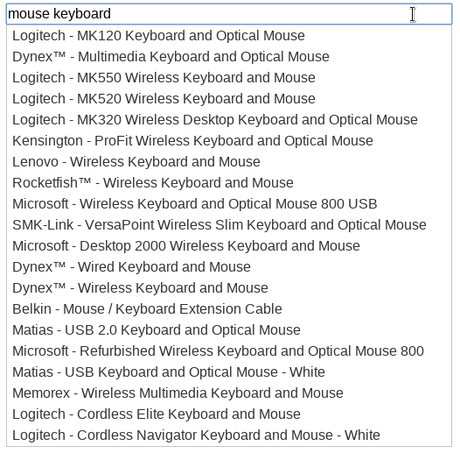

- in the search method I split the text into words and dynamically build a database query with filters to fetch entities. So for the text "mouse keyboard" the database query would look like this: Product.query(Product.partial_strings == "mouse", Product.partial_strings == "keyboard") and would return Products whose name contains both words "mouse" and "keyboard" (possibly along with other words). The way it is done now, it matches exact words only. Of course, with a few lines of code it would be possible to tokenize the words in the product name so that it would also match partial words. Something like this could work:

    name = "mouse keyboard"
    partial_strings = []
    for word in name.split(' '):
        # store every prefix of the word that is at least 3 characters long
        for i in range(3, len(word) + 1):
            partial_strings.append(word[0:i])
    print(partial_strings)
    # ['mou', 'mous', 'mouse', 'key', 'keyb', 'keybo', 'keyboa', 'keyboar', 'keyboard']

So now it would also match the text "mou", i.e. allowing a more forgiving search.
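To see how prefix tokens and the equality filters from the search method fit together, here is a minimal in-memory sketch. It does not touch Datastore: `make_prefixes` and `matches` are hypothetical helpers, and `matches` only imitates how several equality filters on a list property combine (every queried word has to equal at least one stored token).

```python
def make_prefixes(name, min_len=3):
    """Return every prefix of length >= min_len for each word in name."""
    prefixes = []
    for word in name.lower().split():
        for i in range(min_len, len(word) + 1):
            prefix = word[:i]
            if prefix not in prefixes:  # avoid storing duplicates
                prefixes.append(prefix)
    return prefixes


def matches(stored_tokens, text_query):
    """Imitate ANDed equality filters on a list property (in memory only)."""
    words = [w for w in text_query.lower().split() if w]
    return all(word in stored_tokens for word in words)


tokens = make_prefixes("Mouse Keyboard")
print(matches(tokens, "mou key"))    # True: both prefixes are stored
print(matches(tokens, "mouse pad"))  # False: "pad" is not stored
```

The trade-off is that each product now stores several tokens per word instead of one, so the list property and its index grow accordingly.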

Regarding the backend and data upload, everything is more or less the same as in the previous article. Uploading 1.2M rows from a CSV file took 2.5 hours. I did it in a similar way as with the Search API, although for Datastore there are no restrictions regarding the frequency of operations. It could be faster if I used concurrent requests, but I didn't bother with that this time.
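As one hedged illustration of how the upload could be sped up, rows can be grouped into batches so that each call saves many entities at once. Note that this shows batching, not true concurrency. The sketch is plain Python: `batched` and `upload` are hypothetical helpers, `put_batch` stands in for whatever saves a list of entities (on App Engine, NDB's `ndb.put_multi` accepts a list of entities), and the batch size of 500 is an assumption, not a value from the actual upload.

```python
def batched(rows, size=500):
    """Yield consecutive lists of at most `size` rows."""
    batch = []
    for row in rows:
        batch.append(row)
        if len(batch) == size:
            yield batch
            batch = []
    if batch:  # final, possibly smaller batch
        yield batch


def upload(rows, put_batch, size=500):
    """Send rows to put_batch in batches; return the number of batches sent."""
    count = 0
    for batch in batched(rows, size):
        put_batch(batch)
        count += 1
    return count


saved = []  # stand-in for the database: collects every batch it receives
print(upload(range(1200), saved.append, size=500))  # 3 batches: 500, 500, 200
```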

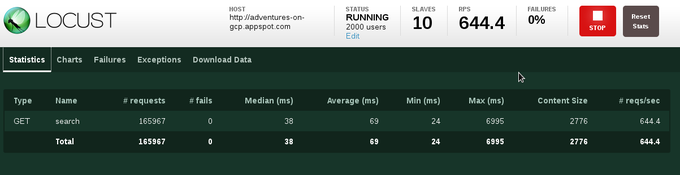

Load testing

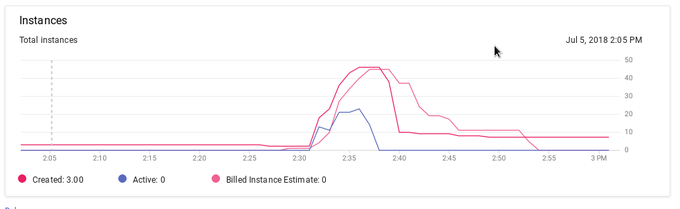

Using the same configuration as in the previous example, the test ramped up to 2000 users at a hatch rate of 10 users per second, which took a few minutes, and then kept running for a few more minutes at the maximum number of users. It made ~165k requests with a median response time of 38ms and an average of 69ms. Overall, Datastore looks like faster storage than the Search API. This could be related to the fact that Datastore fetch time depends on the number of objects it retrieves, so if it retrieves none or only a few, it can be faster. After the tests I realized that during upload I removed non-alphanumeric characters, while the load test input still contained them, so for those queries it didn't return any results. Of course, in this case too, some caching could speed up responses.
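The mismatch between the uploaded data and the load test input comes from normalizing text at write time but not at query time. One way to avoid it, sketched here under assumptions, is to run both through the same function. `tokenize` is a hypothetical helper, and the exact pattern is an assumption about which characters get stripped.

```python
import re

# Assumed pattern: anything that is not a lowercase letter or a digit.
NON_ALNUM = re.compile(r'[^a-z0-9]+')


def tokenize(text, min_len=3):
    """Lowercase, replace non-alphanumerics with spaces, drop short words."""
    cleaned = NON_ALNUM.sub(' ', text.lower())
    return [w for w in cleaned.split() if len(w) >= min_len]


stored = tokenize("Wireless Mouse (USB-C), black")  # done before saving
query = tokenize("mouse, usb-c")                    # done on the search text
print(stored)
print(all(word in stored for word in query))
```

Dropping short words from the query as well means that a two-letter word in the search box no longer forces an empty result.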

Graph displaying total requests per second and the development of average response time.

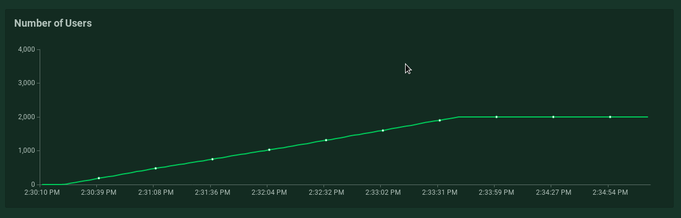

And the number of users over time.

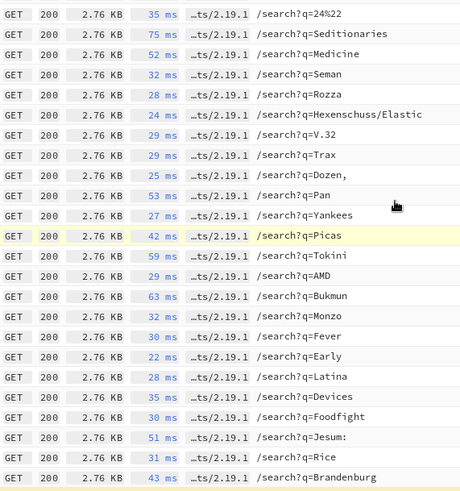

Logs

Number of Google App Engine instances throughout the test.

Total costs for this test were ~$4. All of it is Datastore related: 1.07M write operations for $1.89 and 3.6M read operations for $2.13. App Engine was free, and I didn't count the Kubernetes cluster used for the load test.
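The bill can be roughly reproduced with a few lines of arithmetic. This is a sketch under assumptions: it takes per-100k prices of $0.18 for writes and $0.06 for reads, subtracts one day of free quota (20k writes, 50k reads), and uses the rounded operation counts quoted above.

```python
def cost(operations, free_quota, price_per_100k):
    """Billable cost in dollars after subtracting the free daily quota."""
    billable = max(operations - free_quota, 0)
    return round(billable * price_per_100k / 100000.0, 2)


writes = cost(1070000, 20000, 0.18)  # 1.07M writes, 20k free
reads = cost(3600000, 50000, 0.06)   # 3.6M reads, 50k free
print(writes, reads, round(writes + reads, 2))
```

Under these assumptions the result is $1.89 for writes and $2.13 for reads, which matches the figures above.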

In conclusion: Datastore is a viable choice for text search, although with limited text search capabilities, and it offers easier integration with the other database models an application is likely to have.