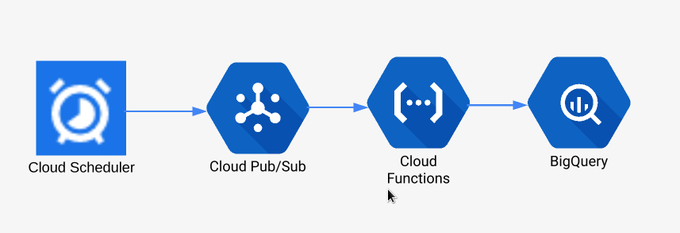

Simple serverless data pipeline on Google Cloud Platform

In one of my previous articles I wrote about creating a data pipeline that regularly collects information about BigQuery public datasets and inserts it into a single BigQuery table. In this article I want to describe how it is designed. It's not rocket science, but rather an example of how to create a serverless pipeline. Everything runs on Google Cloud Platform and costs $0. The complete code is here: https://github.com/zdenulo/bigquery_public_datasets_metadata.

Cloud Scheduler

Cloud Scheduler regularly publishes a message to a Cloud Pub/Sub topic, which triggers a Cloud Function where the main part happens (reading info from BigQuery and writing it back). Cloud Scheduler could trigger the Cloud Function directly via an HTTP request, but it goes through Pub/Sub instead because this way the Cloud Function is not exposed to the internet and can only be triggered via Pub/Sub. A small security detail.

The command to create a job in Cloud Scheduler that publishes to the Pub/Sub topic is:

gcloud alpha scheduler jobs create pubsub bq-public-dataset-job --schedule="0 */4 * * *" --topic="bq-ingest" --message-body=" "

with output:

    pubsubTarget:
      data: IA==
      topicName: projects/adventures-on-gcp/topics/bq-ingest
    retryConfig:
      maxBackoffDuration: 3600s
      maxDoublings: 16
      maxRetryDuration: 0s
      minBackoffDuration: 5s
    schedule: 0 */4 * * *
    state: ENABLED
    timeZone: Etc/UTC
    userUpdateTime: '2019-01-14T22:32:59Z'
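The `data: IA==` value in this output is the message body in base64, which is how Pub/Sub payloads are represented. As a quick check, this small sketch (standard library only, variable names are mine) decodes it back to the single space passed via `--message-body`:

```python
import base64

# Value of pubsubTarget.data as printed by gcloud in the output above.
encoded_payload = "IA=="

# Decoding the base64 string recovers the original message bytes.
decoded_payload = base64.b64decode(encoded_payload)

print(repr(decoded_payload))  # b' '
```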

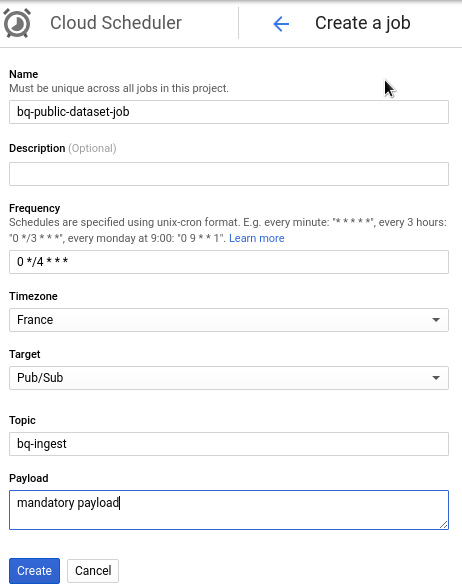

Or with web UI:

Based on these settings, Cloud Scheduler will publish a message to Pub/Sub every 4 hours, on the full hour. A minor detail: when I created the scheduler job via gcloud, the content of the Pub/Sub message (payload) could be a single space " " (it doesn't accept an empty string). When I did it through the web UI, I needed to write some text.
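To make the schedule `0 */4 * * *` concrete, here is a minimal sketch that lists the next run times after a given moment. It is not a general cron parser: the helper `next_runs` is hypothetical and hard-codes this one expression (minute 0 of every hour divisible by 4), and the start time is simply the `userUpdateTime` from the job output, in UTC to match the job's time zone.

```python
from datetime import datetime, timedelta, timezone


def next_runs(start, count=3):
    """Return the next `count` run times of "0 */4 * * *" strictly after `start`."""
    # Minute field "0" and hour field "*/4" mean minute 0 of hours 0, 4, 8, 12, 16, 20.
    candidate = start.replace(minute=0, second=0, microsecond=0) + timedelta(hours=1)
    runs = []
    while len(runs) < count:
        if candidate.hour % 4 == 0:
            runs.append(candidate)
        candidate += timedelta(hours=1)
    return runs


start = datetime(2019, 1, 14, 22, 32, 59, tzinfo=timezone.utc)
for run in next_runs(start):
    print(run.isoformat())
```

For a job created at 22:32 UTC, the first message is therefore published at midnight, then at 04:00 and 08:00.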

PubSub

The next step is to create the Pub/Sub topic that was referenced in the Cloud Scheduler settings.

gcloud pubsub topics create bq-ingest

with output:

Created topic [projects/adventures-on-gcp/topics/bq-ingest].

Cloud Function

The next step is to create and upload the Cloud Function, which runs whenever a message is published to the Pub/Sub topic referenced in the Cloud Scheduler job. The code is on GitHub and the main script consists of 3 parts. The first gets data from the public datasets, the second uploads that data into Google Cloud Storage, and the third initiates a job to load the data from that file into BigQuery.
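The overall shape of such a function can be sketched as follows. This is not the code from the repository, only a rough skeleton under my own assumptions: the three helpers (`fetch_metadata`, `upload_to_gcs`, `start_load_job`), the bucket URI and the newline-delimited JSON format are all hypothetical placeholders standing in for the real BigQuery and Cloud Storage calls. What it does show is the `(event, context)` signature of a Pub/Sub-triggered Python function and the order of the three steps.

```python
import base64
import json


def fetch_metadata():
    """Step 1 (placeholder): the real script reads info about public datasets from BigQuery."""
    return [{"dataset_id": "example_dataset", "table_id": "example_table"}]


def to_ndjson(rows):
    """Serialize rows as newline-delimited JSON, one object per line (assumed format)."""
    return "\n".join(json.dumps(row, sort_keys=True) for row in rows)


def upload_to_gcs(content):
    """Step 2 (placeholder): the real script writes the file to Cloud Storage."""
    return "gs://example-bucket/metadata.json"  # hypothetical URI


def start_load_job(uri):
    """Step 3 (placeholder): the real script starts a BigQuery load job from the file."""
    return "load requested for " + uri


def bq_public_metadata(event, context):
    """Entry point for a Pub/Sub-triggered background function."""
    # Pub/Sub delivers the message body base64-encoded in event["data"].
    payload = base64.b64decode(event.get("data", "")).decode("utf-8")
    print("triggered with payload: %r" % payload)
    rows = fetch_metadata()
    uri = upload_to_gcs(to_ndjson(rows))
    return start_load_job(uri)


# Local simulation of the message Cloud Scheduler publishes (a single space).
fake_event = {"data": base64.b64encode(b" ").decode("ascii")}
print(bq_public_metadata(fake_event, None))
```

Keeping the steps in separate functions makes it possible to call the entry point locally with a fake event, as in the last two lines, before deploying anything.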

The code is uploaded to Cloud Functions via gcloud, run from the folder that contains it:

gcloud functions deploy bq_public_metadata --runtime python37 --trigger-topic bq-ingest --timeout 540

I selected Python 3.7 as the runtime since the script is written in Python; next is the Pub/Sub topic I created in the previous step. Lastly, I set the timeout to 540 seconds because the script takes a while to get the data and the function would otherwise time out. bq_public_metadata is the name of the Cloud Function as well as the name of the function in main.py.

And that's all.