Using system packages and custom binaries in Google Cloud Functions.

Google Cloud Functions is a serverless compute platform on Google Cloud Platform. Its main principle is: "Write code in Node.js, Python or Go, deploy it, and don't worry about anything else". This flow suits small pieces of code well: a simple function that responds to HTTP requests or to events from Pub/Sub, Cloud Storage, Firestore, etc. You can install any third-party library for your language with its package manager (npm, pip), but sometimes even that is not enough. Cloud Functions are built on top of an Ubuntu 18.04 image and provide some useful system packages, a feature that is not often mentioned and the topic I want to focus on in this article. I ran into this while working on a project for a client who wanted to convert files from an Adobe file format, and the request was to do it with Cloud Functions.

Disclaimer: Google Cloud also offers Cloud Run, a serverless container platform that lets you install any package, library or program in a Docker container. It is more suitable when you want to use distro packages or run your own custom software, but Cloud Functions feel more lightweight and can therefore be more attractive in some situations.

As I mentioned, Cloud Functions runtimes come with preinstalled system packages: https://cloud.google.com/functions/docs/reference/python-system-packages. Because the runtimes are based on an Ubuntu 18.04 image, it's like having an Ubuntu sandbox below your function.

I set up a GitHub repo with some Cloud Functions written in Python 3.7 as examples: https://github.com/zdenulo/google-cloud-functions-system-packages.

Exploring runtime

To execute system commands in a Python program, I use the subprocess module. A general use case looks as follows:

    import subprocess

    cmd = "ls -ltr /".split(' ')
    p = subprocess.Popen(cmd, stderr=subprocess.PIPE, stdout=subprocess.PIPE)
    stdout, stderr = p.communicate()
    if stderr:
        print(f"there was error {stderr}")
    if stdout:
        print(f"result: {stdout}")

The command to execute is defined in the variable cmd. Its regular output ends up in the stdout variable and any error output in the stderr variable.
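The same pattern can be wrapped in a small helper. The sketch below is not taken from the repository: `run_command` and its `timeout` default are hypothetical names and values chosen for illustration. It uses `subprocess.run` and returns the exit code alongside the decoded output, because some programs write warnings to stderr even when they succeed, so an empty stderr is not always a reliable success signal.

```python
import subprocess


def run_command(args, timeout=30):
    """Run a command given as a list of arguments.

    Returns a tuple (exit_code, stdout_text, stderr_text).
    The timeout default is an arbitrary value for this sketch.
    """
    completed = subprocess.run(
        args,
        stdout=subprocess.PIPE,
        stderr=subprocess.PIPE,
        timeout=timeout,
    )
    return (
        completed.returncode,
        completed.stdout.decode("utf8", errors="replace"),
        completed.stderr.decode("utf8", errors="replace"),
    )


if __name__ == "__main__":
    code, out, err = run_command(["ls", "-ltr", "/"])
    if code != 0:
        print(f"command failed with exit code {code}: {err}")
    else:
        print(f"result: {out}")
```

Checking the exit code rather than stderr is a design choice of this sketch, not something the original functions do.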

With the Cloud Function cf_system, I execute several commands to find out which environment variables are set and which executables are available in the PATH directories. For example, these are the environment variables:

    {'CODE_LOCATION': '/user_code',
     'DEBIAN_FRONTEND': 'noninteractive',
     'ENTRY_POINT': 'main',
     'FUNCTION_IDENTITY': '[email protected]',
     'FUNCTION_MEMORY_MB': '256',
     'FUNCTION_NAME': 'system',
     'FUNCTION_REGION': 'us-central1',
     'FUNCTION_TIMEOUT_SEC': '60',
     'FUNCTION_TRIGGER_TYPE': 'HTTP_TRIGGER',
     'GCLOUD_PROJECT': 'gcp',
     'GCP_PROJECT': 'gcp',
     'HOME': '/tmp',
     'LC_CTYPE': 'C.UTF-8',
     'NODE_ENV': 'production',
     'PATH': '/env/bin:/usr/local/sbin:/usr/local/bin:/usr/sbin:/usr/bin:/sbin:/bin',
     'PORT': '8080',
     'PWD': '/user_code',
     'SUPERVISOR_HOSTNAME': '169.254.8.129',
     'SUPERVISOR_INTERNAL_PORT': '8081',
     'VIRTUAL_ENV': '/env',
     'WORKER_PORT': '8091',
     'X_GOOGLE_CODE_LOCATION': '/user_code',
     'X_GOOGLE_CONTAINER_LOGGING_ENABLED': 'false',
     'X_GOOGLE_ENTRY_POINT': 'main',
     'X_GOOGLE_FUNCTION_IDENTITY': '[email protected]',
     'X_GOOGLE_FUNCTION_MEMORY_MB': '256',
     'X_GOOGLE_FUNCTION_NAME': 'system',
     'X_GOOGLE_FUNCTION_REGION': 'us-central1',
     'X_GOOGLE_FUNCTION_TIMEOUT_SEC': '60',
     'X_GOOGLE_FUNCTION_TRIGGER_TYPE': 'HTTP_TRIGGER',
     'X_GOOGLE_FUNCTION_VERSION': '2',
     'X_GOOGLE_GCLOUD_PROJECT': 'gcp',
     'X_GOOGLE_GCP_PROJECT': 'gcp',
     'X_GOOGLE_LOAD_ON_START': 'false',
     'X_GOOGLE_SUPERVISOR_HOSTNAME': '169.254.8.129',
     'X_GOOGLE_SUPERVISOR_INTERNAL_PORT': '8081',
     'X_GOOGLE_WORKER_PORT': '8091'}

And this is part of the list of executables:

    -rwxr-xr-x 1 root root 142608 Apr 24 2019 ffserver
    -rwxr-xr-x 1 root root 161944 Apr 24 2019 ffprobe
    -rwxr-xr-x 1 root root 137376 Apr 24 2019 ffplay
    -rwxr-xr-x 1 root root 272528 Apr 24 2019 ffmpeg
    -rwxr-xr-x 1 root root 43168 May 7 2019 apt-mark
    -rwxr-xr-x 1 root root 27391 May 7 2019 apt-key
    -rwxr-xr-x 1 root root 43168 May 7 2019 apt-get
    -rwxr-xr-x 1 root root 22616 May 7 2019 apt-config
    -rwxr-xr-x 1 root root 22688 May 7 2019 apt-cdrom
    -rwxr-xr-x 1 root root 80032 May 7 2019 apt-cache
    -rwxr-xr-x 1 root root 14424 May 7 2019 apt
    lrwxrwxrwx 1 root root 0 May 7 2019 ps2txt -> ps2ascii
    -rwxr-xr-x 1 root root 669 May 7 2019 ps2ps2
    -rwxr-xr-x 1 root root 647 May 7 2019 ps2ps
    -rwxr-xr-x 1 root root 1097 May 7 2019 ps2pdfwr
    -rwxr-xr-x 1 root root 2752 May 7 2019 ps2epsi
    -rwxr-xr-x 1 root root 631 May 7 2019 ps2ascii
    -rwxr-xr-x 1 root root 395 May 7 2019 printafm
    -rwxr-xr-x 1 root root 404 May 7 2019 pphs
    -rwxr-xr-x 1 root root 516 May 7 2019 pfbtopfa
    -rwxr-xr-x 1 root root 498 May 7 2019 pf2afm
    -rwxr-xr-x 1 root root 909 May 7 2019 pdf2ps
    -rwxr-xr-x 1 root root 698 May 7 2019 pdf2dsc
    -rwxr-xr-x 1 root root 277 May 7 2019 gsnd
    -rwxr-xr-x 1 root root 350 May 7 2019 gslp

As you can see from the (incomplete) list, there are various programs such as ffmpeg (video/audio conversion) and the ps2* tools (PostScript conversion), as well as awk, sed, grep and many more. In the next section, we'll see a practical example of using one of these programs.
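A listing like this can also be produced from Python without calling `ls`. The following is a minimal sketch, not the code of the cf_system function: `list_path_executables` is a hypothetical helper that walks the directories in PATH and collects the names of files that are marked executable.

```python
import os


def list_path_executables(path_value=None):
    """Return a sorted list of executable file names found in the PATH directories.

    path_value defaults to the PATH environment variable of the current process.
    """
    if path_value is None:
        path_value = os.environ.get("PATH", "")
    names = set()
    for directory in path_value.split(os.pathsep):
        if not directory or not os.path.isdir(directory):
            continue
        try:
            entries = os.listdir(directory)
        except OSError:
            # skip directories that cannot be read
            continue
        for entry in entries:
            full_path = os.path.join(directory, entry)
            if os.path.isfile(full_path) and os.access(full_path, os.X_OK):
                names.add(entry)
    return sorted(names)


if __name__ == "__main__":
    for name in list_path_executables():
        print(name)
```

To check for one specific program, the standard library call `shutil.which("gs")` returns its full path, or `None` if it is not on PATH.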

The last thing I implemented while investigating the Cloud Functions system is executing an arbitrary command (pipes don't work). When I set the command apt list --installed as the option parameter of my Cloud Function, I get a list of installed packages.

    Listing...
    adduser/bionic,now 3.116ubuntu1 all [installed]
    adwaita-icon-theme/bionic,now 3.28.0-1ubuntu1 all [installed,automatic]
    apt/bionic-updates,now 1.6.11 amd64 [installed]
    aspell/bionic,now 0.60.7~20110707-4 amd64 [installed,automatic]
    aspell-en/bionic,now 2017.08.24-0-0.1 all [installed,automatic]
    at-spi2-core/bionic,now 2.28.0-1 amd64 [installed,automatic]
    base-files/bionic-updates,now 10.1ubuntu2.4 amd64 [installed]
    base-passwd/bionic,now 3.5.44 amd64 [installed]
    bash/bionic-updates,now 4.4.18-2ubuntu1.1 amd64 [installed]
    bsdutils/bionic-updates,now 1:2.31.1-0.4ubuntu3.3 amd64 [installed]
    bzip2/bionic,now 1.0.6-8.1 amd64 [installed]
    ca-certificates/bionic,bionic-updates,now 20180409 all [installed]
    coreutils/bionic,now 8.28-1ubuntu1 amd64 [installed]
    cpp/bionic-updates,now 4:7.4.0-1ubuntu2.2 amd64 [installed,automatic]
    cpp-7/bionic-updates,now 7.4.0-1ubuntu1~18.04 amd64 [installed,automatic]
    curl/bionic-updates,bionic-security,now 7.58.0-2ubuntu3.7 amd64 [installed
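Pipes presumably don't work because the command string is split into arguments and passed to `subprocess.Popen` without a shell, so a `|` character is handed to the program as a literal argument instead of connecting two processes. If a pipe is needed, two processes can be chained by hand. The sketch below shows that standard pattern; `run_pipeline` is a hypothetical helper name and is not part of the repository.

```python
import subprocess


def run_pipeline(first_args, second_args):
    """Emulate `first | second` without a shell and return the final stdout as text."""
    first = subprocess.Popen(first_args, stdout=subprocess.PIPE)
    second = subprocess.Popen(
        second_args, stdin=first.stdout, stdout=subprocess.PIPE
    )
    # Close our copy of the pipe so `first` gets SIGPIPE if `second` exits early.
    first.stdout.close()
    output, _ = second.communicate()
    first.wait()
    return output.decode("utf8", errors="replace")


if __name__ == "__main__":
    # Equivalent of: apt list --installed | grep curl
    print(run_pipeline(["apt", "list", "--installed"], ["grep", "curl"]))
```

An alternative is `shell=True`, but then the whole string is interpreted by a shell, which is risky when any part of it comes from a request parameter.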

There are probably other things to look at and discover, but let's move on to some practical use cases.

Conversion of PDF document to images.

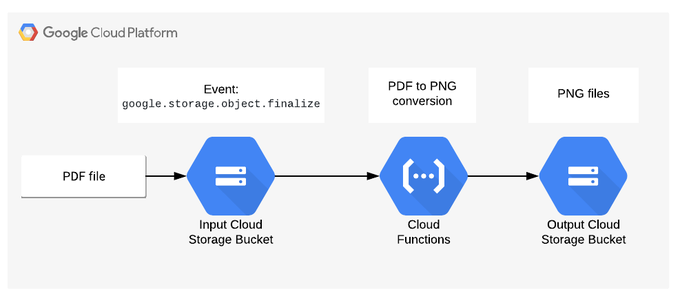

This example implements the following process: when a PDF file is uploaded to a Cloud Storage bucket, it triggers a Cloud Function that converts the PDF document to PNG images (one image per page) and stores them in an output Storage bucket, where they can be processed further, for example with OCR. The diagram above represents the whole process.

Doing this in pure Python would be difficult. Luckily, the Ghostscript program saves the day. It comes preinstalled in Cloud Functions, and the following command converts a PDF to PNG (or other image formats):

gs -dSAFER -dNOPAUSE -dBATCH -sDEVICE=png16m -r600 -sOutputFile="output-%d.png" input.pdf

Full Cloud Function code:

    import logging
    import os
    import subprocess

    from google.cloud import storage

    # Module-level settings. /tmp is the writable folder mentioned below;
    # the bucket name is a placeholder to replace with your own.
    TMP_FOLDER = '/tmp'
    OUTPUT_BUCKET = 'your-output-bucket'


    def main(data, context=None):
        gcs = storage.Client(project=os.environ['GCP_PROJECT'])
        bucket_name = data['bucket']
        file_name = data['name']
        if file_name[-4:] != '.pdf':
            logging.error("input file is not pdf")
            return

        # download the uploaded PDF to local disk
        input_filepath = os.path.join(TMP_FOLDER, file_name)
        bucket = gcs.bucket(bucket_name)
        blob = bucket.blob(file_name)
        blob.download_to_filename(input_filepath)

        # Ghostscript replaces %d with the page number
        output_filename = file_name.rsplit('.', 1)[0]
        output_filename += '-%d'
        output_filename += '.png'
        output_filepath = os.path.join(TMP_FOLDER, output_filename)

        # arguments are passed as a list (no shell), so the output path must not be quoted
        cmd = ['gs', '-dSAFER', '-dNOPAUSE', '-dBATCH', '-sDEVICE=png16m', '-r600',
               f'-sOutputFile={output_filepath}', input_filepath]
        p = subprocess.Popen(cmd, stderr=subprocess.PIPE, stdout=subprocess.PIPE)
        stdout, stderr = p.communicate()
        error = stderr.decode('utf8')
        if error:
            logging.error(error)
            return

        # upload every generated PNG and remove it from local disk
        for filename in os.listdir(TMP_FOLDER):
            if filename[-4:] == '.png':
                full_path = os.path.join(TMP_FOLDER, filename)
                output_bucket = gcs.bucket(OUTPUT_BUCKET)
                output_blob = output_bucket.blob(filename)
                output_blob.upload_from_filename(full_path)
                logging.info(f'uploaded file: {filename}')
                os.remove(full_path)

        if os.path.exists(input_filepath):
            os.remove(input_filepath)
        return

Since the Cloud Function is triggered by an event from Cloud Storage, the incoming data contains the names of the bucket and the file. Based on that, the file is downloaded from Cloud Storage, the conversion is executed by calling the gs command, and the results are saved to the /tmp folder. After the output files are uploaded to the output bucket, they are deleted from /tmp, as is the input file, to clean everything up. Since a Cloud Function instance handles at most one request at a time, files from different requests cannot get mixed up within the same instance during processing. The same instance can, however, process the next request (an existing instance is reused instead of a new one being created), and that's why the input and output files are deleted from the /tmp folder.
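An alternative to deleting files one by one is to do all the work inside a throwaway directory that is removed as a whole, even when an exception is raised. The sketch below illustrates the idea with `tempfile.TemporaryDirectory`; it is not how the repository does it. `convert_in_scratch_dir`, `convert` and `handle_output` are hypothetical names: in the real function, `convert` would call gs and `handle_output` would upload a file to the output bucket.

```python
import os
import tempfile


def convert_in_scratch_dir(input_bytes, convert, handle_output):
    """Run convert() inside a throwaway directory and pass each result to handle_output().

    convert(input_path, out_dir) must write its results into out_dir.
    handle_output(path) is called once per result file, in sorted order.
    Returns the (already deleted) scratch directory path, for inspection only.
    """
    with tempfile.TemporaryDirectory() as work_dir:
        input_path = os.path.join(work_dir, "input.pdf")
        with open(input_path, "wb") as f:
            f.write(input_bytes)

        out_dir = os.path.join(work_dir, "out")
        os.mkdir(out_dir)

        convert(input_path, out_dir)

        for name in sorted(os.listdir(out_dir)):
            handle_output(os.path.join(out_dir, name))
    # work_dir and everything in it has been removed at this point
    return work_dir
```

Because each invocation gets its own directory, leftovers from an earlier, failed invocation on the same instance cannot be picked up by mistake.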

This is a great example of a Cloud Functions use case: simple and straightforward, with a few lines of code doing one thing (actually three things: downloading, converting, uploading).

Executing custom program in Cloud Function

In the previous example, we used Ghostscript, which is preinstalled in the Cloud Functions environment. But there is always some edge case where we want to use our own compiled program that is available neither on the system nor as a library for the language we are using. For this example, we will build a text-to-ASCII-art converter: the HTTP GET request carries a text parameter with the input text, and the response contains that text rendered as ASCII art. I know that there is a Python library for this, but for the sake of example, I'll use a program called figlet. The full code for this example is here: https://github.com/zdenulo/google-cloud-functions-system-packages/tree/master/cf_ascii. Figlet is written in C, so to use it I need to compile it into a binary executable. The process is the following:

The source code can be downloaded to your local computer from ftp://ftp.figlet.org/pub/figlet/program/unix/figlet-2.2.5.tar.gz.

Then unpack and compile it (on Linux):

    tar -zxvf figlet-2.2.5.tar.gz
    cd figlet-2.2.5/
    make

If everything goes well, this should create the figlet executable. There can be permission issues when executing this file in a Cloud Function, especially if you compile the code on Windows, so just in case, run this command:

chmod a+x figlet
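If you prefer to verify this from Python, for example in a small pre-deploy script run on your local machine, the same permission bits can be set with the standard library. This is only a sketch; `ensure_executable` is a hypothetical helper, and it is meant to run locally before uploading, not inside the deployed function.

```python
import os
import stat


def ensure_executable(path):
    """Add the execute bit for user, group and others (like `chmod a+x`).

    Returns True if the file is executable by the current user afterwards.
    """
    mode = os.stat(path).st_mode
    os.chmod(path, mode | stat.S_IXUSR | stat.S_IXGRP | stat.S_IXOTH)
    return os.access(path, os.X_OK)


if __name__ == "__main__":
    if os.path.exists("figlet"):
        print("figlet executable:", ensure_executable("figlet"))
```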

A common command to execute is:

./figlet -d fonts hello

which produces output

     _          _ _
    | |__   ___| | | ___
    | '_ \ / _ \ | |/ _ \
    | | | |  __/ | | (_) |
    |_| |_|\___|_|_|\___/

To use this in a Cloud Function, the figlet executable and the fonts folder need to be copied into the Cloud Function folder and uploaded together with the Cloud Function code. The code itself is basically a wrapper that executes figlet and returns its output.

    import logging
    import subprocess

    from flask import make_response


    def main(request):
        text = request.args.get('text', '')
        if not text:
            # a missing parameter is a client error
            return 'missing text parameter', 400
        logging.info(f'received text: {text}')
        # pass the arguments as a list so the text is never interpreted by a shell
        cmd = ['./figlet', '-d', 'fonts', text]
        p = subprocess.Popen(cmd, stderr=subprocess.PIPE, stdout=subprocess.PIPE)
        stdout, stderr = p.communicate()
        error = stderr.decode('utf8')
        if error:
            # figlet itself failed, so report a server-side error
            return error, 500
        out = stdout.decode('utf8')
        response = make_response(out)
        response.headers["content-type"] = "text/plain"
        return response

Cloud Run could be used as well, but since we compile only once and wrap everything in a few lines of code, a Cloud Function works too. In this example we took the source of a C program, compiled it on a local computer, and uploaded the binary together with the Cloud Function code that calls it. The result is a simple API that reads the input text and converts it to ASCII art.

In this article, I showed some possibilities for using programs beyond what the Cloud Functions language runtimes provide. I guess you won't need this in 90% of Cloud Functions, but for the remaining 10% it can be useful.