Exporting data from Firebase (Firestore) to BigQuery

Firebase is a popular platform for web and mobile app development. The main database option when developing applications on Firebase platform is the NoSQL database Cloud Firestore which is also part of Google Cloud Platform. Since Firestore is a NoSQL database, there are different considerations regarding the modeling and design of relationships in comparison with SQL databases. The weaker part of NoSQL databases is limited analytical capabilities. Here BigQuery comes into play since it's a serverless data warehouse designed specifically for OLAP analysis. Question is how to get data from Firestore to BigQuery?

In this article, I want to describe two approaches. One is importing of full batch Firestore export and the second approach is online sync between Firestore and BigQuery. Of course, depending on the Firestore modeling, lots of analytical data can be extracted by using counters, or simple queries in Firestore, but nothing beats straightforwardness of SQL :)!

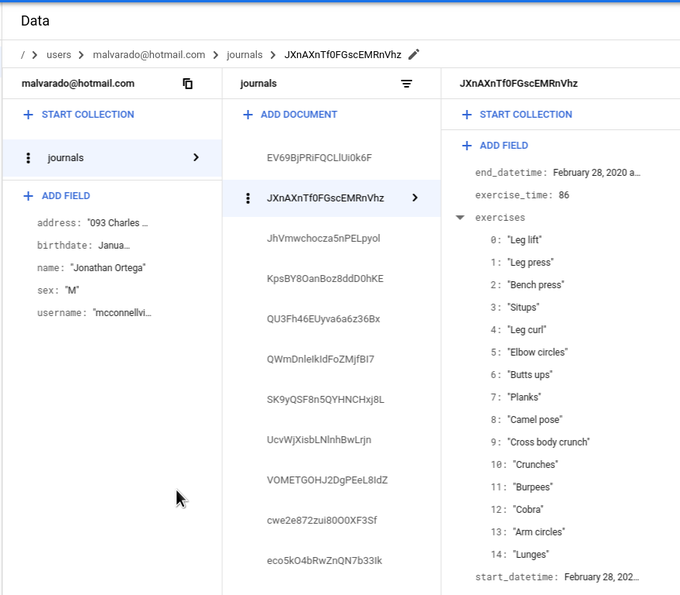

Data

All code samples and scripts are in this repository https://github.com/zdenulo/firestore2bigquery. As a data source, I will use the fictional YAFTA app (Yet Another Fitness Tracking App) which contains collections of Users (with basic info like username, email, sex) and this collection contains subcollection of Journals where every exercise is recorded. Every journal entry contains start/end time of exercise and a list of what kind of exercises were done. I created a script to generate random data and insert it into Firestore which can be found here. I uploaded a couple of hundred users and each one having dozen on journal entries.

Full export

Firestore supports export/import operations of collections to Cloud Storage and BigQuery supports the import od these exports. We just need to put this all together.

1) Create/select bucket

where you will do Firestore export, in my case, bucket will be called f2b-exports

gsutil mb -p $GCP_PROJECT gs://f2b-exports

2) Export Firestore collections

gcloud firestore export gs://f2b-exports/export-01 --collection-ids=users,journals

I set path 'export-01 in a bucket, otherwise, it would be datetime timestamp. It prints the following output:

Waiting for [projects/zdenky-15ba5/databases/(default)/operations/ASAyNjkxMzMwOTMyChp0bHVhZmVkBxJsYXJ0bmVjc3Utc2Jvai1uaW1kYRQKLRI] to finish...done. metadata: '@type': type.googleapis.com/google.firestore.admin.v1.ExportDocumentsMetadata collectionIds: - users - journals operationState: PROCESSING outputUriPrefix: gs://f2b-exports/export-01 startTime: '2020-03-20T10:49:24.973411Z' name: projects/zdenky-15ba5/databases/(default)/operations/ASAyNjkxMzMwOTMyChp0bHVhZmVkBxJsYXJ0bmVjc3Utc2Jvai1uaW1kYRQKLRI

At the time when this was printed, the job was completed although it printed that it is in the state of PROCESSING. For bigger collections it is good to use --async flag to do it asynchronously. To get info about the operation, the following command can be used:

gcloud firestore operations describe ASAyNjkxMzMwOTMyChp0bHVhZmVkBxJsYXJ0bmVjc3Utc2Jvai1uaW1kYRQKLRI

done: true

metadata:

'@type': type.googleapis.com/google.firestore.admin.v1.ExportDocumentsMetadata

collectionIds:

- users

- journals

endTime: '2020-03-20T10:49:41.312303Z'

operationState: SUCCESSFUL

outputUriPrefix: gs://f2b-exports/export-01

progressBytes:

completedWork: '8222613'

progressDocuments:

completedWork: '14702'

estimatedWork: '12928'

startTime: '2020-03-20T10:49:24.973411Z'

name: projects/zdenky-15ba5/databases/(default)/operations/ASAyNjkxMzMwOTMyChp0bHVhZmVkBxJsYXJ0bmVjc3Utc2Jvai1uaW1kYRQKLRI

response:

'@type': type.googleapis.com/google.firestore.admin.v1.ExportDocumentsResponse

outputUriPrefix: gs://f2b-exports/export-01

3) Import data into BigQuery

We need to import each collection one by one into a separate BigQuery table. I'll import into dataset 'f2b' and tables will have the same name as collections.

bq load --source_format=DATASTORE_BACKUP f2b.users gs://f2b-exports/export-01/all_namespaces/kind_users/all_namespaces_kind_users.export_metadata Waiting on bqjob_r4f696fee02205f3e_00000170f79ef5c8_1 ... (1s) Current status: DONE

bq load --source_format=DATASTORE_BACKUP f2b.journals gs://f2b-exports/export-01/all_namespaces/kind_journals/all_namespaces_kind_journals.export_metadata Waiting on bqjob_r1fffa3bf31a8debd_00000170f7a0ed20_1 ... (10s) Current status: DONE

In the Github repository, there is a Python code that can be used to deploy Cloud Functions or with little adjustments, it can be used as a script to do these 3 steps.

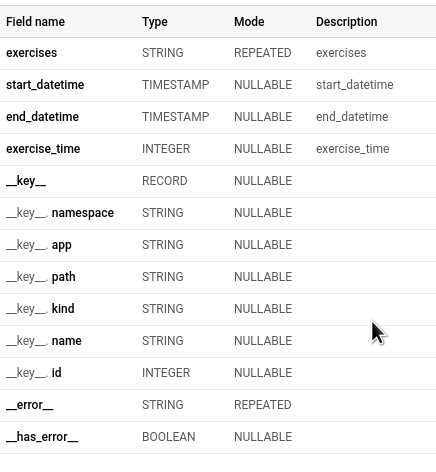

BigQuery schema and queries

When importing data, Bigquery converts Firestore data types into its' native types plus it adds several fields grouped into__key__ Record, from which the most useful are __key__.name which contains the key of a document and __key__.path which contains the full path for a document. This is necessary when joining tables (collections). On the image below is a schema for the 'journals' table.

Now we can do whatever SQL queries come to our mind. I guess in the context of this data, we would like to join users and journals and do analytics on top of that. Here it gets a little trickier. Column__key__.name path in subcollection (in this case table journals) contains string value like this:

"users", "[email protected]", "journals", "ZPs9vH2mzR58MFpTQzqc", i.e. structure:

"<parent collection name>", "<parent document key>", "<subcollection name>", "<subcollection document key>".

In order to join this table with 'users' table, we need to extract "parent document key" and join on that value. As an example, here is a basic query that calculates the total number of exercise time per user.

WITH journals_data AS ( SELECT TRIM(REPLACE(SPLIT(__key__.path, ',')[OFFSET(1)], '"', '')) AS user_key, exercise_time FROM f2b.journals ) SELECT users.__key__.name AS email, users.name AS name, SUM(journals.exercise_time) AS total_time FROM journals_data AS journals JOIN f2b.users AS users ON users.__key__.name=journals.user_key GROUP BY email, name ORDER BY total_time DESC

In order to extract the key, we need to split the string, replace double quotes and trim. Besides that, everything else works usually like with any other BigQuery table based on my experience.

Few things to keep in mind when using this approach:

- it's always full export from Firestore, you can't limit by query or similar. This counts towards Firestore billing since every Firestore operation is billed.

- you need to explicitly define which collections/subcollections you want to export like in the example. If you don't specify collections, Firestore make full export which cannot be imported in BigQuery

- for BigQuery import it's possible to specify concrete fields/columns you want to import

- Firestore export can be queried without exporting, by creating External Table in BigQuery

- This approach is good when you don't need very often to query data and you're ok to do full export every time (depends on the amount of Firestore data and your budget)

Official documentation for Firestore Export/Import and BigQuery import.

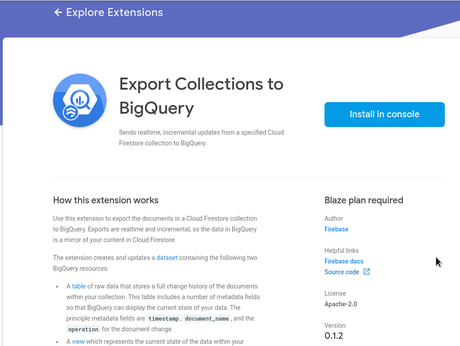

Syncing between Firestore and BigQuery.

This approach relies on triggering Cloud Function whenever there is a change in a Firestore collection and streaming those changes into BigQuery table. This way you have the same data in BigQuery as you have in Firestore. Firebase offers an automated way to set this up using the "Export Collections to BigQuery" Firebase Extension.

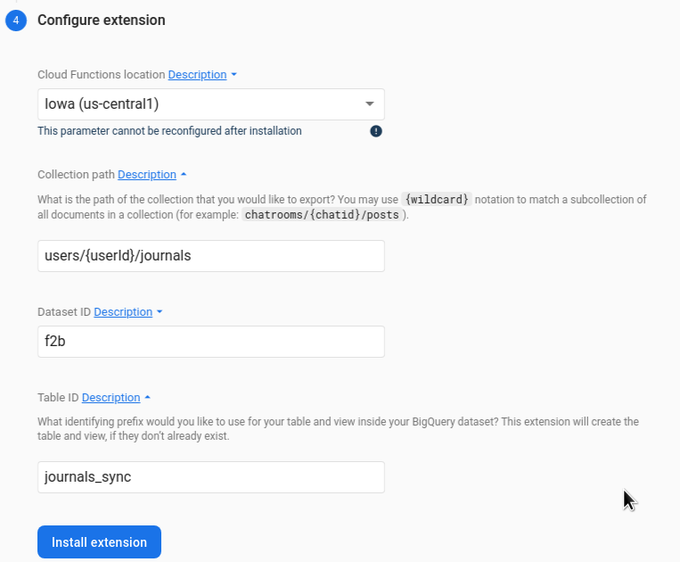

As I mentioned, besides inserting data into BigQuery, it creates automatically a table where data is inserted and it also creates a view based on the table to get only the most recent data for documents. In order to set up Firebase extension, you need to login to Firebase (and in case you're not using it, import GCP project there). On this URL https://firebase.google.com/products/extensions/firestore-bigquery-export is a description and documentation regarding this extension. So you just need to click the button "Install in console" to set it up via Firebase UI or using Firebase CLI.

In total there are 4 steps in the setup. As the first step it sets a Cloud Function, reviewing billing setup, creates a service account with BigQuery Data Editor role (if necessary) and in the last step, you set a collection path for Firebase collection as well as BigQuery dataset and table name where data will be exported.

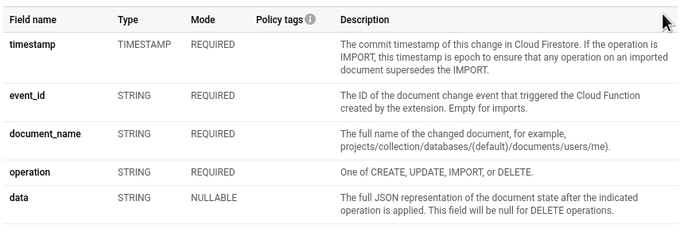

After this, it takes several minutes to deploy Cloud Function. A table in BigQuery is created upon the first execution of Cloud Function and insert to BigQuery. There is the table in BigQuery ending with "_raw_changelog", in my case, it's "journals_sync_raw_changelog". This is where all changes are stored. Every single update, delete, insert. Basically in this table, you have the whole history of changes for your collection. Schema is rather simple and always the same:

In column "data" there is the whole document in JSON representation and field "document_name" contains the full path of document key.

To get always the most recent data (and exclude deleted one), a view based on the "raw_changelog" table is created automatically as well, its' name ends with "_raw_latest", in a case from above it's "journals_sync_raw_latest".

By installing the extension, you set the streaming of changes from your Firestore collection to BigQuery. But if you are starting with some collection where there is already data in Firestore? For that, you need to execute a script to upload initial changes to BigQuery as well.

Upload of existing documents

You can go to installed extension details by visiting URL https://console.firebase.google.com/u/0/project/<project-id>/extensions or by clicking on Extensions button in Firebase menu.

When you click on "How this extension works" you'll see a detailed overview of how this extension works. On the bottom of the description, there is a URL that leads to Github repository where there is a description of how importing of existing documents should be done. I should emphasise that at the moment (April 2020) script doesn't support directly import of subcollections. In issues, there is mentioned workaround but it involves modifying source code, so I won't describe it here.

Importing existing documents with a script

To import collection, steps are the following:

in case you are not authenticated for Cloud SDK, you need to execute:

gcloud auth application-default login

then when the next command is executed (this needs npm/NodeJS installed), it downloads necessary packages and starts with interactive settings. Here you will need to enter your project id, collection path (same as when setting up extension), BigQuery dataset and table prefix (without _raw_changes). This how it worked for me. Lines starting with ? are the ones where you need to enter values.

npx @firebaseextensions/fs-bq-import-collection ? What is your Firebase project ID? zdenky-15ba5 ? What is the path of the the Cloud Firestore Collection you would like to import from? (This may, or may not, be the same Collection for which you plan to mir ror changes.) users ? What is the ID of the BigQuery dataset that you would like to use? (A dataset will be created if it doesn't already exist) f2b ? What is the identifying prefix of the BigQuery table that you would like to import to? (A table will be created if one doesn't already exist) users ? How many documents should the import stream into BigQuery at once? 300 Importing data from Cloud Firestore Collection: users, to BigQuery Dataset: f2b, Table: users_raw_changelog BigQuery dataset already exists: f2b BigQuery table with name users_raw_changelog already exists in dataset f2b! View with id users_raw_latest already exists in dataset f2b. Inserting 300 row(s) of data into BigQuery Inserted 300 row(s) of data into BigQuery Inserting 211 row(s) of data into BigQuery Inserted 211 row(s) of data into BigQuery --------------------------------------------------------- Finished importing 511 Firestore rows to BigQuery ---------------------------------------------------------

As expected data is imported to a table. The timestamp is as Unix epoch time 0, i.e. 1970-01-01 00:00:00 which secures that if there was some change in meanwhile (and uploaded via Cloud Function) it's not overridden with initial import. The operation in a table is marked as "IMPORT".

As it's noted in the documentation, an operation for this is O(collection size), so if you have rather a large collection it would be more reliable and faster to do full export to BigQuery as it's described in the first part of this article and also if you have a subcollection which you want to import.

Importing existing documents from batch export in BigQuery

In this case, an additional step is needed and that is to convert columns from batch export to JSON data. In the next example, I'll demonstrate how it looks like in the case of 'journals' subcollection. For a reminder, journals subcollection has four fields: start_datetime (timestamp), end_datetime (timestamp), exercise_time (integer), and exercises (list of strings). To insert data from Firestore batch export into 'journals_sync_raw_changelog' table where all changes are inserted, I wrote this query:

INSERT f2b.journals_sync_raw_changelog

SELECT TIMESTAMP('1970-01-01 00:00:00') as timestamp,

"" AS event_id,

CONCAT("projects/zdenky-15b5/databases/(default)/documents/users/", TRIM(REPLACE(SPLIT(__key__.path, ',')[OFFSET(1)], '"', '')), "/journals/", __key__.name) AS document_name,

"IMPORT" AS operation,

TO_JSON_STRING(

STRUCT( STRUCT(UNIX_SECONDS(start_datetime) AS _seconds, 0 AS _nanoseconds) as start_datetime,

STRUCT(UNIX_SECONDS(end_datetime) AS _seconds, 0 AS _nanoseconds) AS end_datetime,

exercise_time AS exercise_time,

exercises AS exercises)

) AS data

FROM f2b.journals

The most complex part here is to construct correctly subcollection document's key path and correctly format timestamp to adhere to Firestore exported format. With this query, the changelog table is populated and all data is exported.

To use data from a view containing the latest data, the data column needs to parsed from the JSON string. Unfortunately, JSON conversion in BigQuery at the moment is cumbersome, so it needs to be done explicitly and array deserialization needs to be done via User Defined Function. So to extract JSON data from 'journals' table, the query could look like this:

CREATE TEMP FUNCTION json_extract_arrayx(s STRING, key STRING) RETURNS ARRAY<STRING> LANGUAGE js AS """

try {

var xs = JSON.parse(s)[key];

return xs == null ? null : xs.filter((x,i) => x != null).map((x,i) => x.toString());

} catch (e) {

throw e + ', on input string s: ' + s;

}

""";

SELECT

TIMESTAMP_SECONDS(CAST (JSON_EXTRACT(JSON_EXTRACT(data, '$.start_datetime'), "$._seconds") AS INT64)) AS start_datetime,

TIMESTAMP_SECONDS(CAST (JSON_EXTRACT(JSON_EXTRACT(data, '$.end_datetime'), "$._seconds") AS INT64)) AS end_datetime,

json_extract_arrayx(data, 'exercises') AS exercises,

JSON_EXTRACT(data, '$.exercise_time') AS exercise_time

FROM `f2b.journals_sync_raw_changelog`

An alternative is to use UDF to deserialize there all JSON data when using in a view's query.

Conclusion

Batch export is suitable for a one-off or bigger collection export since the main part is done by export batch. In Github repo, there is an example of how to start batch export programmatically. This could be used in Cloud Scheduler and Cloud Function to do periodical exports.

Online sync with Cloud Functions looks like an obvious choice since it basically mirrors Firestore's collection state to BigQuery and you have a full history of data updates in BigQuery. Depending on the amount of data and usage, there can be additional costs for Cloud Functions and Firestore operations.