Connecting Cloud Functions with Compute Engine using Serverless VPC Access

Serverless products on Google Cloud Platform (GCP), such as Cloud Functions and App Engine, hide their server infrastructure. Because of that, they could connect to some other GCP products (Compute Engine, Memorystore, Cloud SQL) only through public IP addresses. This adds security risk, since those services are exposed to the whole internet, and it adds latency, since traffic travels over the public internet instead of the fast Google network. Most of these products can be configured with only an internal IP address, which is reachable from within the GCP network but not from the internet. Until recently, however, serverless products could not connect that way because of networking limitations.

Recently GCP introduced Serverless VPC Access, which acts as glue between serverless products and other products in a VPC network. When you create a Serverless VPC Access Connector, f1-micro instances are created under the hood to handle connections and data transfer. Only requests from a serverless instance to other servers are supported. Other products cannot reach serverless instances over the internal network; they can only call them over the public internet via an HTTP request.

At the moment Serverless VPC Access can be used with Cloud Functions and App Engine (not yet with managed Cloud Run), and the connector needs to be in the same region as the serverless service. Pricing is currently "as 1 f1-micro instance per 100 Mbps of throughput automatically provisioned for the connector", which should be about $5 per month, although it may increase since the service is still in Beta.

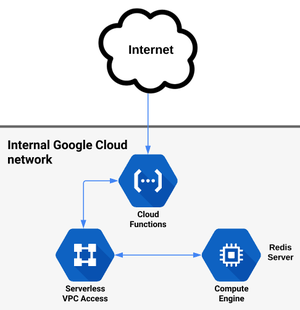

To demonstrate the functionality, I will use a Cloud Function written in Python that connects to Redis, which is deployed as a container on Compute Engine and used as a simple cache service.

When an HTTP request reaches the Cloud Function, the code makes a request to the Redis server. That request goes through the Serverless VPC Access Connector to the Compute Engine instance, which has only an internal IP address, and the response comes back the same way, as illustrated in the diagram above. This setup can serve as a simple cache service, although Cloud Memorystore is a similar managed product with more capabilities.

The repository with scripts and code is located on GitHub. Several steps are needed to make this work:

1. Creating Compute Engine instance

We need to create a firewall rule which will allow access to Redis (default port 6379).

gcloud compute firewall-rules create allow-redis --network default --allow tcp:6379

Then create the instance:

gcloud compute instances create-with-container redis-cache \
    --machine-type=f1-micro \
    --container-image=registry.hub.docker.com/library/redis \
    --tags=allow-redis \
    --zone=us-central1-a \
    --private-network-ip=10.128.0.2 \
    --network default

gcloud compute instances delete-access-config redis-cache

As mentioned previously, I am creating an instance from the Redis Docker container image, which is hosted on the Docker registry and deployed automatically on the Compute Engine instance. I am applying a network tag for the firewall, explicitly assigning an internal IP address (which depends on the region), and setting some mandatory options such as the machine type and zone (f1-micro is for demonstration purposes, not a real-life use case). The instance is created with a public IP as well, and the second command removes it. The reason is that when I created an instance without a public IP, the connection didn't work, whereas creating it with a public IP and removing it right away worked fine. Maybe it's a temporary glitch or maybe I'm doing something wrong, but that's how it worked for me. In the final state the instance has only an internal IP address.
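Since the connection behaved differently depending on how the instance was created, a quick reachability check can help narrow things down. The following is only a minimal sketch using the Python standard library; the helper name `can_reach` is my own, and it has to run somewhere inside the same VPC network (for example on another Compute Engine instance) to say anything useful about the internal address.

```python
import socket


def can_reach(host: str, port: int = 6379, timeout: float = 3.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within timeout."""
    try:
        # create_connection resolves the host and performs the TCP handshake.
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts and unreachable hosts.
        return False
```

Calling `can_reach("10.128.0.2")` from inside the network should return `True` once the firewall rule and the Redis container are in place. It only confirms that the port accepts TCP connections, not that Redis itself answers commands.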

2. Creating a Serverless VPC Access Connector

One-time command to activate the Serverless VPC Access API:

gcloud services enable vpcaccess.googleapis.com

The command to create a Connector doesn't have many options:

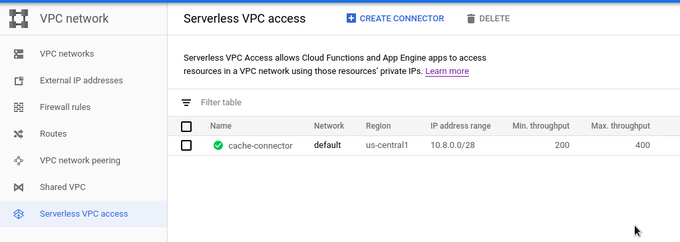

gcloud beta compute networks vpc-access connectors create cache-connector \
    --network default \
    --region us-central1 \
    --range 10.8.0.0/28 \
    --min-throughput 200 \
    --max-throughput 400

It is important to place the connector in the same region as the serverless deployment. I didn't figure out how important the IP range is, but 10.8.0.0/28, as recommended during creation in the Web UI, worked. After creation there are not many options: you can only delete the connector or view its basic data. In Cloud Console, Serverless VPC Access is under the VPC Network section.
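As far as I understand, the connector range has to be an unused /28 block that does not overlap any existing subnet in the network. The sketch below checks that with Python's `ipaddress` module. The helper name `is_valid_connector_range` is hypothetical, and the subnet 10.128.0.0/20 is an assumption (I believe it is the default network's range for us-central1, which matches the instance address 10.128.0.2 used earlier).

```python
import ipaddress

# Range passed to --range when creating the connector.
connector_range = ipaddress.ip_network("10.8.0.0/28")

# Assumption: the default network's us-central1 subnet is 10.128.0.0/20.
subnet_range = ipaddress.ip_network("10.128.0.0/20")


def is_valid_connector_range(candidate, existing):
    """Check that candidate is a /28 that overlaps none of the existing ranges."""
    return candidate.prefixlen == 28 and not any(
        candidate.overlaps(net) for net in existing
    )


print(connector_range.num_addresses)  # a /28 block holds 16 addresses
print(is_valid_connector_range(connector_range, [subnet_range]))
```

With these values the script prints `16` and `True`. If your network has custom subnets, add them to the list before picking a range.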

3. Deploying Cloud Function

Sample code used in Cloud Function looks like this:

import os
import datetime
import random

import redis

r = redis.StrictRedis(host=os.environ['REDIS_HOST'], decode_responses=True)


def main(request=None):
    # Use the current minute as the cache key
    cache_key = datetime.datetime.now().minute
    val = r.get(cache_key)
    if not val:
        # Cache miss: generate a value and store it
        val = random.random()
        out = f'set value: {val}'
        r.set(cache_key, val)
    else:
        # Cache hit: report the value and remove the key
        out = f'value from cache: {val}'
        r.delete(cache_key)
    return out

I am passing the IP of the Redis instance through an environment variable, which is set during deployment and corresponds to the internal IP address of the Compute Engine instance. Besides that, I am getting, setting and deleting a cache key to interact with the Redis database.
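To try the get/set/delete logic locally without a Redis server, the same branching can be run against an in-memory stand-in. This is only a sketch: `FakeRedis` and `handle` are hypothetical names of mine, not part of the redis library or of the deployed function, and the fake implements just the three methods used here.

```python
import random


class FakeRedis:
    """Hypothetical in-memory stand-in exposing only get, set and delete."""

    def __init__(self):
        self._data = {}

    def get(self, key):
        return self._data.get(str(key))

    def set(self, key, value):
        # Values are stored as strings, similar to decode_responses=True.
        self._data[str(key)] = str(value)

    def delete(self, key):
        self._data.pop(str(key), None)


def handle(client, cache_key):
    """Same branching as main(), with the client and key passed in."""
    val = client.get(cache_key)
    if not val:
        val = random.random()
        client.set(cache_key, val)
        return f'set value: {val}'
    client.delete(cache_key)
    return f'value from cache: {val}'


cache = FakeRedis()
first = handle(cache, 42)   # key missing: a value is generated and stored
second = handle(cache, 42)  # key present: the value is returned and deleted
third = handle(cache, 42)   # key missing again
print(first, second, third, sep='\n')
```

The first and third calls report a newly set value, and the second call returns the value stored by the first one. Passing the client in as an argument is a design choice for testability; the deployed function keeps the module-level client.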

To deploy a Cloud Function that works with the Connector, extra IAM roles need to be granted to the Cloud Functions Service Agent account: Project/Viewer and Compute/NetworkUser. The Cloud Functions Service Agent account usually has the email service-<PROJECT-NUMBER>@gcf-admin-robot.iam.gserviceaccount.com

Commands are:

gcloud projects add-iam-policy-binding $PROJECT_ID \
    --member="serviceAccount:service-<PROJECT-NUMBER>@gcf-admin-robot.iam.gserviceaccount.com" \
    --role=roles/viewer

gcloud projects add-iam-policy-binding $PROJECT_ID \
    --member="serviceAccount:service-<PROJECT-NUMBER>@gcf-admin-robot.iam.gserviceaccount.com" \
    --role=roles/compute.networkUser

To deploy the Cloud Function, the VPC Connector needs to be specified in the following format: projects/<PROJECT_ID>/locations/<REGION>/connectors/<CONNECTOR_NAME>

VPC_CONNECTOR=projects/adventures-on-gcp/locations/us-central1/connectors/cache-connector

gcloud beta functions deploy random-cache --entry-point main \
    --runtime python37 \
    --trigger-http \
    --region us-central1 \
    --vpc-connector $VPC_CONNECTOR \
    --set-env-vars REDIS_HOST=10.128.0.2
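Because the connector path is easy to mistype and the connector has to live in the same region as the function, a small helper can assemble the path and check the region before deploying. This is just an illustrative sketch; `connector_path` and `same_region` are hypothetical helper names, not part of any Google library.

```python
def connector_path(project_id: str, region: str, connector_name: str) -> str:
    """Build the connector resource path in the format expected at deploy time."""
    return f"projects/{project_id}/locations/{region}/connectors/{connector_name}"


def same_region(path: str, function_region: str) -> bool:
    """Check that the region embedded in the path matches the function's region."""
    parts = path.split("/")
    # Expected layout: projects/<id>/locations/<region>/connectors/<name>
    return len(parts) == 6 and parts[2] == "locations" and parts[3] == function_region


path = connector_path("adventures-on-gcp", "us-central1", "cache-connector")
print(path)
print(same_region(path, "us-central1"))
```

For the values used in this post, the first line printed is the same string assigned to VPC_CONNECTOR, and the region check returns `True`.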

Now when you hit the Cloud Function URL, you should get a response from Redis.

Finally, Serverless VPC Access should also work with both first and second generation App Engine Standard runtimes. The configuration consists of adding the Connector "path", in the same format as for the Cloud Function, to the yaml config file.