BigQuery public datasets metadata

I created public BigQuery table which contains metadata about BigQuery public datasets. As it well known, BigQuery has public datasets containing data with various nature and size. For example, there are Bitcoin and Ethereum transactions, data from World Bank, data about patents, varios (mostly USA) agencies like Bereau of Labor or Forest statistics etc. Since they are public, that means that anybody with Google Cloud account can query them and will be charged only for amount of data queried, after 1TB of free monthly quota is consumed.

Public datasets are perfect resource if:

- You are starting with BigQuery and don't have proper data to play with and try out BigQuery functionalities

- You want to use data from public datasets for (serious) research or just for fun

Some resources about BigQuery public datasets can be found here:

https://cloud.google.com/bigquery/public-data/ - General guide how to start with BigQuery and public datasets

https://www.reddit.com/r/bigquery/wiki/datasets - List of public datasets and some information about them (source)

https://console.cloud.google.com/marketplace/browse?filter=solution-type:dataset - List of public datasets and comprehensive information as well as some sample queries.

The reason why I am writing this article is that I was looking through public datasets tables which are updated at least daily but from these available information I couldn't find easily. I've tried of course to look randomly in BigQuery into several tables to get info but most were not updated, and doing it manually it would take long time. Because each table contains some metadata like size, number of rows, date of creation / modification I wrote simple Python script to extract information from tables of public datasets and put it all into one table. Complete code is on Github https://github.com/zdenulo/bigquery_public_datasets_metadata

Core code which gets data is this one:

import datetime

import logging

from google.cloud import bigquery

from settings import *

projects = [

'bigquery-public-data',

]

def get_bq_data():

"""Gets metadata from public BigQuery datasets"""

data = []

for project in projects:

client = bigquery.Client(project=project)

datasets = client.list_datasets()

for dataset in datasets:

dataset_id = dataset.dataset_id

dataset_ref = client.get_dataset(dataset_id)

tables = client.list_tables(dataset_id)

for table in tables:

full_table_id = table.full_table_id.replace(':', '.')

table_ref = client.get_table(full_table_id)

item = {'dataset_id': dataset_id,

'project_id': project,

'table_id': table_ref.table_id,

'dataset_description': dataset_ref.description,

'table_modified': table_ref.modified.strftime("%Y-%m-%d %H:%M:%S"),

'table_created': table_ref.created.strftime("%Y-%m-%d %H:%M:%S"),

'table_description': table_ref.description,

'table_num_bytes': table_ref.num_bytes,

'table_num_rows': table_ref.num_rows,

'table_partitioning_type': table_ref.partitioning_type,

'table_type': table_ref.table_type,

}

data.append(item)

return dataSince there are other GCP projects beside 'bigquery-public-data' which have public data, I'm iterating through projects, then datasets and finally table. When BigQuery dataset is made public, all tables which belong to that dataset are public. I'm putting information into list of dictionaries. I'll describe whole process in some other article.

My public dataset which contains table with metadata is here: https://console.cloud.google.com/bigquery?p=adventures-on-gcp&d=bigquery_public_datasets&page=dataset

With this data, you can get some basic useful information about datasets:

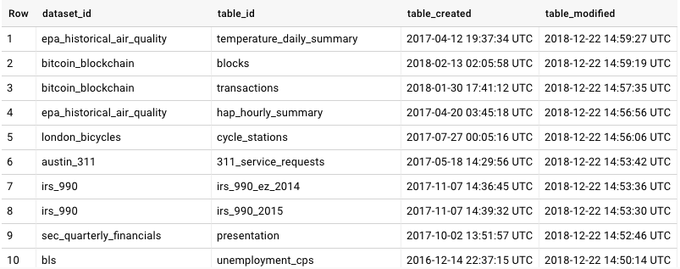

Get most recent updated tables

SELECT dataset_id, table_id, table_created, table_modified

FROM `adventures-on-gcp.bigquery_public_datasets.bq_public_metadata` ORDER BY table_modified DESC

Tables which were updated today

SELECT dataset_id, table_id, table_created, table_modified

FROM `adventures-on-gcp.bigquery_public_datasets.bq_public_metadata` WHERE CAST(table_modified AS DATE) = CURRENT_DATE() ORDER BY table_modified DESC

Out of 1499 tables, 108 were updated today

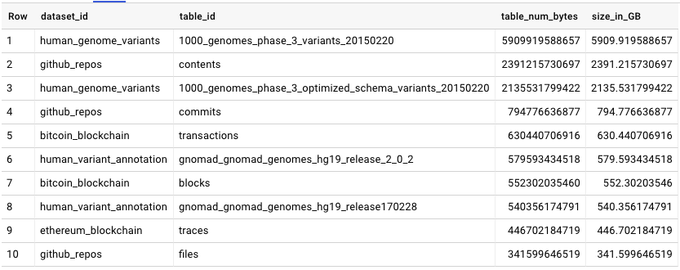

Tables with biggest size

SELECT dataset_id, table_id, table_num_bytes, table_num_bytes / 1000000000 AS size_in_GB FROM `adventures-on-gcp.bigquery_public_datasets.bq_public_metadata` ORDER BY table_num_bytes DESC LIMIT 10

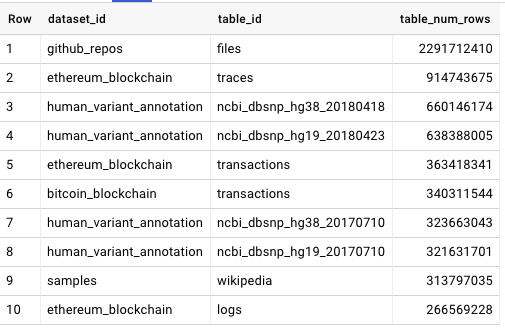

Tables with most number of rows

SELECT dataset_id, table_id, table_num_rows

FROM `adventures-on-gcp.bigquery_public_datasets.bq_public_metadata` ORDER BY table_num_rows DESC LIMIT 10

Whole pipeline is nice example of serverless processing and consists of Cloud Function which is triggered by Pub/Sub from Cloud Scheduler (at the moment every 4 hours). In Cloud Function data is obtained from BigQuery, then stored in file into Google Cloud Storage in JSON format and finally loaded into BigQuery. Content of table is every time overwritten.

Hope this will give you additional insights and place to find about BigQuery Public Datasets.