Backup database to Google Cloud Storage

I have a few small websites hosted on the Digital Ocean server. Each of them has a MySQL database and I wanted to regularly set uploading full database export to some independent location outside of Digital Ocean to sleep more calmly. Since my life revolves around Google Cloud, my preference for storage was clear. The goal is to use crontab to periodically run a bash script to execute MySQL database export and gsutil command to copy a file to the Cloud Storage bucket. To configure the whole process there are several steps involved, which I will describe in this article. I interactions with the Google Cloud project, I am using web UI, although all steps could be done via the command line interface.

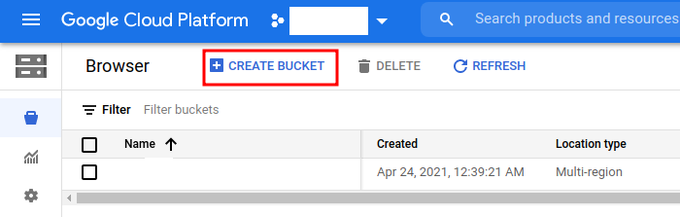

1. Create a Cloud Storage bucket for backups

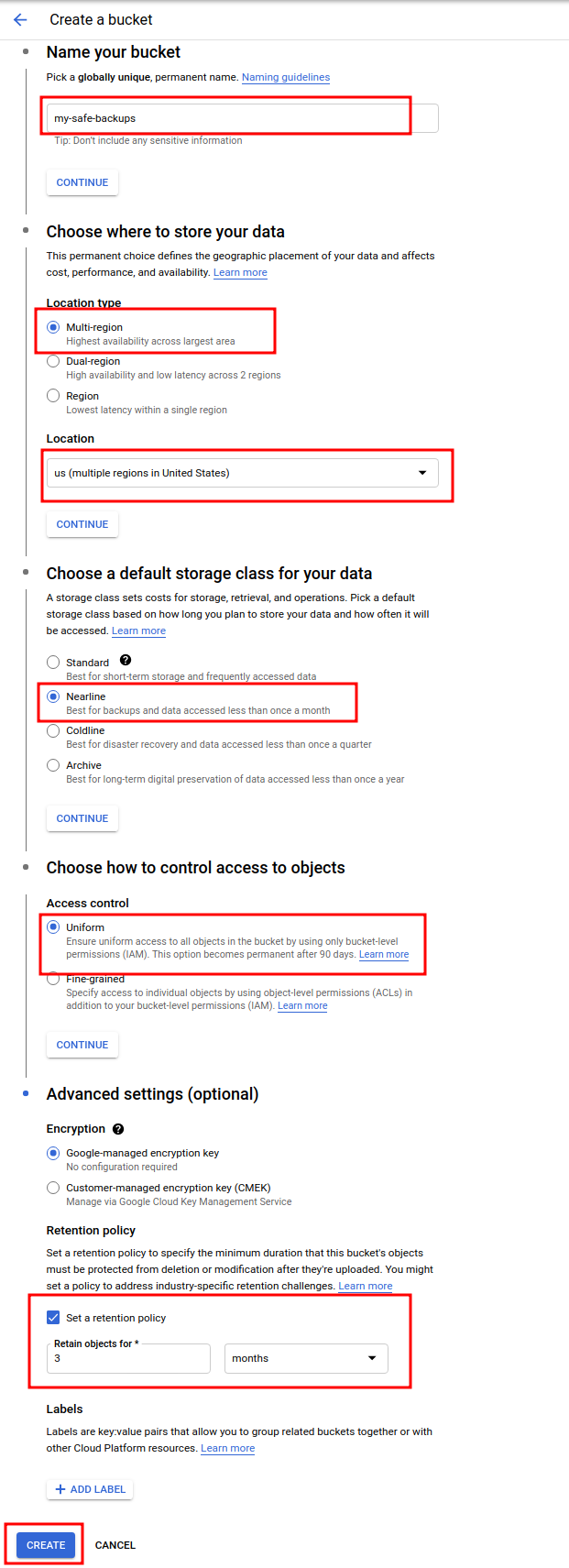

The first step is to create a Cloud Storage bucket where backup files will be stored. In Cloud Console, we go to the Cloud Storage section and click on the button Create bucket.

There are a few things that need to be set. Since this is a backup bucket, I'm setting the storage class to be Nearline (instead of Standard) since this one is a design for not-so-frequent access and it's a bit cheaper (data access is billed as well). I also set a Retention policy to keep objects only for the last 3 months.

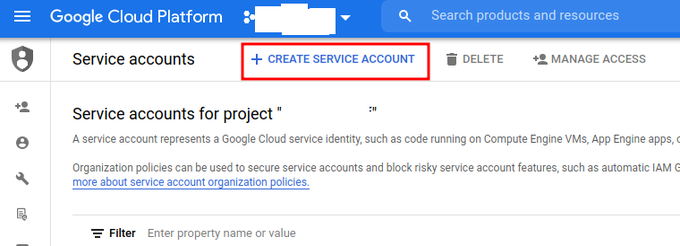

2. Create a Service Account

Since this operation is done on a server (outside of Google Cloud) I will use a Service Account for authentication and access to the Google Cloud project.

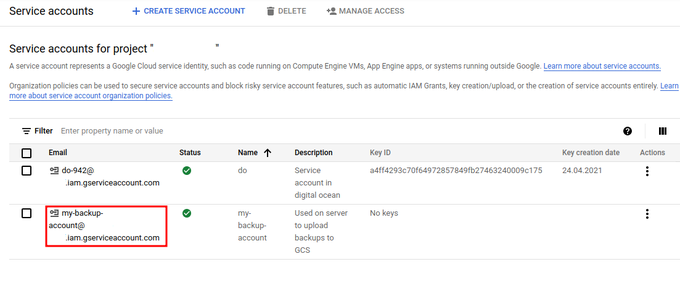

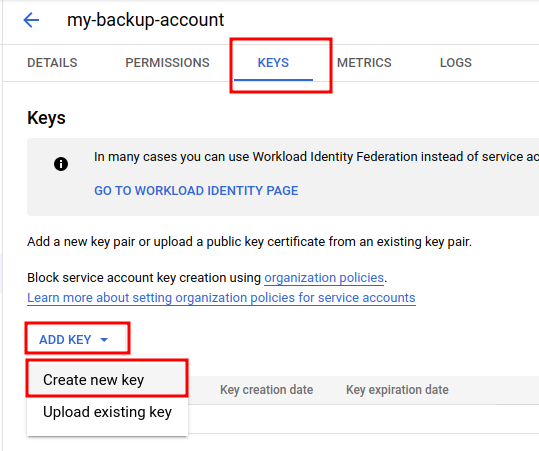

The next step is to download the key file. On the list of service accounts, click on the one you created.

Switch to tab "KEYS" and then click on "ADD KEY" and then "Create new key", the file will be auto-downloaded.

Your internet browser will download the file.

The last step is to copy this file to the server, I'm using scp command:

scp service_account.json <myusername>@<SERVER>

3. Set access for the Service Account to the bucket

Since the script will upload the database export file to the bucket, I need to set correct permissions for the Service Account for the backup Storage bucket, no other access/roles are required for this Service Account for now.

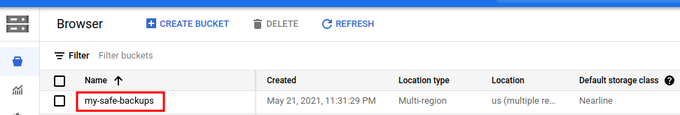

To set access for the Cloud Storage bucket, in the list of Cloud Storage buckets, click on the relevant bucket name.

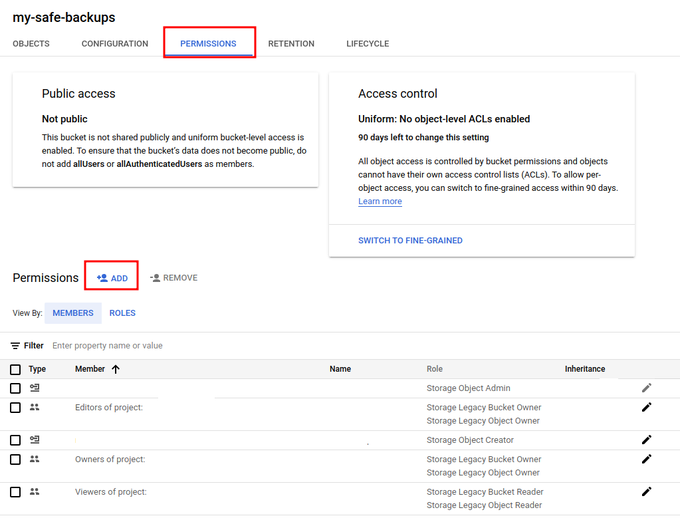

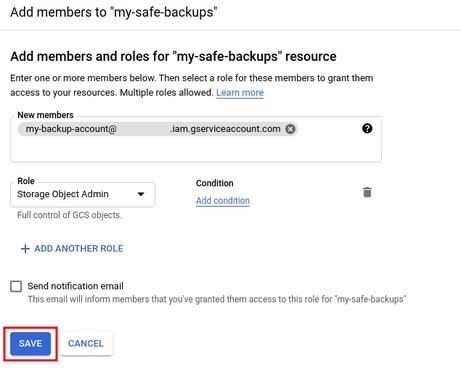

Then switch to the Permissions tab and click Add button to add access to the service account.

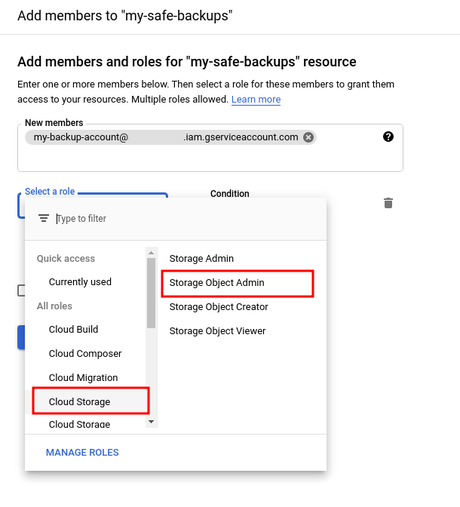

To the "new members" field I'm adding the full email address of the Service Account "my-backup-account@*.iam.gserviceaccount.com"

The minimal role is Storage Object Admin (under Cloud Storage section). Note: Storage Object Creator is not sufficient since when using gsutil command, it's using "list" operation for the bucket as well (for some reason).

Saving settings with the SAVE button.

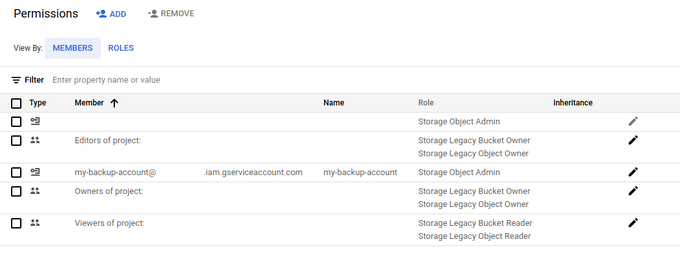

After the service account is added to the bucket, it's listed in the "members" list.

With this process, access is granted only for this bucket, Service Account won't have access to other Cloud Storage buckets.

3. Download and installation of Google Cloud SDK on the server

There are a few ways to install Google Cloud SDK. One way is to install it straight as an OS package. The approach I am using consists of install from the source files.

Installation of Google Cloud SDK is based on these steps https://cloud.google.com/sdk/docs/install#linux. For brevity:

curl -O https://dl.google.com/dl/cloudsdk/channels/rapid/downloads/google-cloud-sdk-337.0.0-linux-x86_64.tar.gz tar -zxvf google-cloud-sdk-337.0.0-linux-x86_64.tar.gz ./google-cloud-sdk/install.sh

Depending on how you have other things configured, I needed to do this extra step:

As the default version on my server, Python 2.7 is set and Google Cloud SDK has an issue with it, so I'm using pyenv to support multiple Python versions. In order to point Google Cloud SDK to my desired Python version, I'm setting the environmental variable CLOUDSDK_PYTHON in my bash_profile to point to pyenv's Python3 interpreter.

export CLOUDSDK_PYTHON=/home/ubuntu/.pyenv/shims/python3

4. Authentication to Cloud SDK with the service account.

To authenticate gcloud with a Service Account which I created and downloaded, I used this command

gcloud auth activate-service-account [email protected] --key-file=/<PATH TO KEY>/service_account.json

If everything is ok, it prints the following message:

Activated service account credentials for: [[email protected]]

Now we can use Cloud SDK tools like gcloud and gsutil to communicate with the Google Cloud project.

5. Bash script

The script backup.sh consists of dumping the database to a temporary folder from which is then uploaded to the Cloud Storage bucket:

#!/bin/bash

DUMP_FOLDER="/tmp"

GCS_BUCKET="my-safe-backups"

DT=$(date "+%Y%m%d")

function backup() {

database=$1

filename="${database}_${DT}.sql"

output_file="${DUMP_FOLDER}/${filename}"

echo $output_file

mysqldump "$database" >"$output_file"

if test -f "$output_file"; then

gsutil cp "$output_file" "gs://${GCS_BUCKET}/${database}/${filename}"

echo "${database} dump uploaded"

rm "${output_file}"

else

echo "No dump file ${output_file}"

fi

}

backup mydb_1

backup mydb_2

DUMP_FOLDER is a local path where database(s) will be exported, GCS_BUCKET is the name of the Cloud Storage bucket that was created in the first step.

Since I have multiple databases, for each one I am making a backup calling backup function with the database name as an input parameter.

6. Setting cron

I've set the backup script to be executed once per week.

10 0 * * 1 /home/ubuntu/bin/backup.sh

Conclusion

Since most of the databases have their own export command, this can be easily edited to use for backup as well.